Standards and best practices are tools that UX designers rely on to focus our work and to make sure we design for end users, not just for ourselves. The better we understand these standards, the stronger our UX abilities. This is where ISO 9241 comes in: the International Organization for Standardization (ISO) has created this set of common user experience standards. They have been developed by industry leaders through validated research and are intended to help designers use the best methods to generate findings we can rely on.

In the first part of our article, we looked at three parts of ISO 9241, including the definition of usability, its dimensions, and its quality aspects. We also saw how norms on user guidance, presentation of information, and dialogue techniques can support us in creating usable interactive products. This second part focuses on the areas of ISO 9241 that relate to usability engineering processes, especially in the context of human-centered design.

Part 210: Human-Centered Design and User Experience

Part 210 of ISO 9241, otherwise known as “Human-centered design for interactive systems,” explains how to run an iterative design project from start to finish. It provides requirements and recommendations for human-centered design principles and activities throughout the life cycle of computer-based interactive systems.

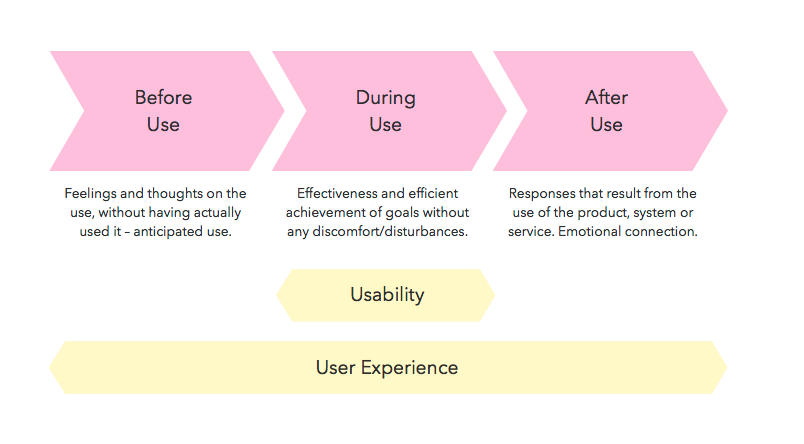

Part 210 also helps us distinguish UX from usability. In our last article we focused on usability, but Part 210 defines user experience. For example, if a user works with the same software on a Mac and on a PC to reach the same goals, the usability will probably be much the same on both platforms (assuming the user interface and processes remain unchanged), but the user experience might differ. Users on the Mac might feel better about the software and have a more positive attitude towards using it than users on the PC, and thus have a better perceived user experience.

Figure 4: Relationship between usability and user experience. Source: adapted from ProContext Consulting GmbH (2010), "Distinguishing Usability and User Experience."

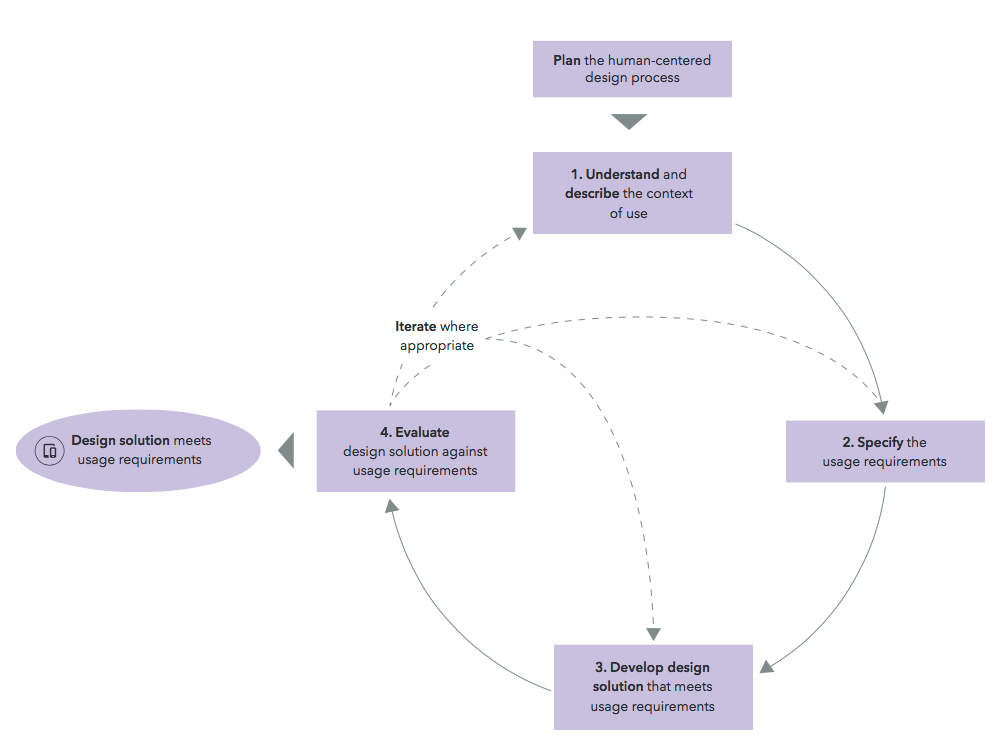

The human-centered design process described in Part 210 supports an iterative user-oriented approach, made up of the following phases (Figure 5):

- Phase 1: Understand and specify the context of use

- Phase 2: Specify the usage requirements

- Phase 3: Develop a design solution that meets the usage requirements

- Phase 4: Evaluate the design solution against the usage requirements

Figure 5: Human-centered design process for interactive systems. Adapted from DIN EN ISO 9241-210

Let’s review each phase in more detail.

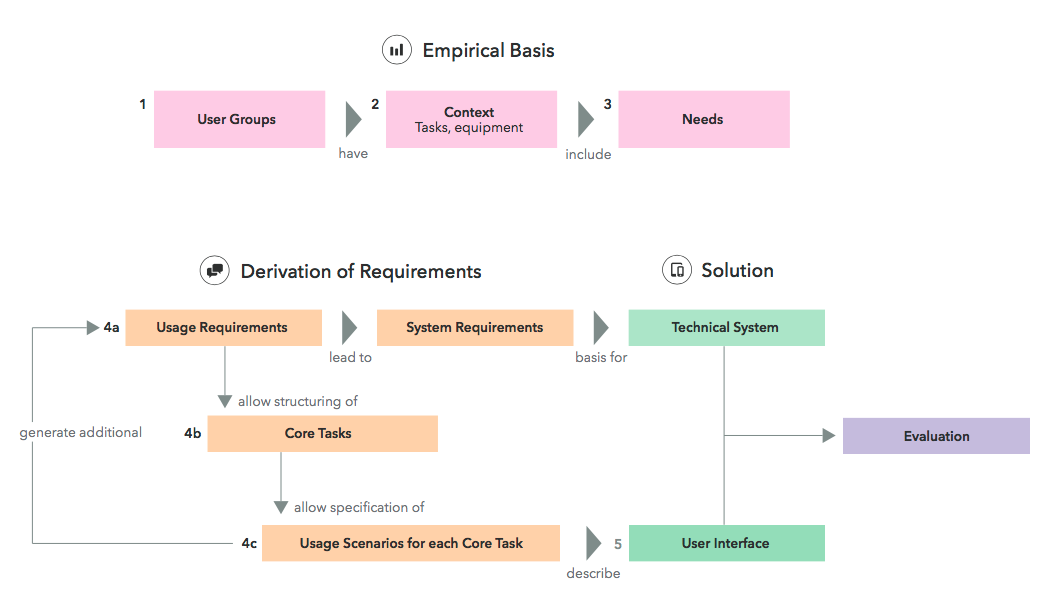

Phase 1: The context of use consists of user characteristics, tasks, and equipment as well as the physical and social environment in which the product is used. The UX team needs to identify all of these for each target user group, since each user group may have different needs for the product.

We can use research methods such as interviews or diary studies to understand the context of use. Based on what we learn about how people may use the system, we can then determine the usage requirements, which brings us to Phase 2.

Phase 2: The usage requirements are derived from the tasks that users perform in a given usage scenario. In other words, usage requirements describe which actions users should be able to perform while interacting with the system; they go hand in hand with the system requirements. In Phase 2, we define these requirements based on what users need to do within the context of use.

Phase 3: Next, we design a solution that meets all the usage requirements. Depending on what needs to be tested, the design might be a low-fidelity prototype in the first few iterations or something very close to the final product.

Figure 6: Connection between user groups, context, needs and usage scenarios.

Phase 4: Finally, we evaluate the design solution from the user's perspective. In other words, we evaluate its usability. We can do this through an expert evaluation, in which usability experts perform a scenario-based walkthrough, or through an empirical evaluation, in which members of the target audience attempt to use the system.

Now, let’s see how this works in practice.

Usability Evaluation Case Study – the HNU website

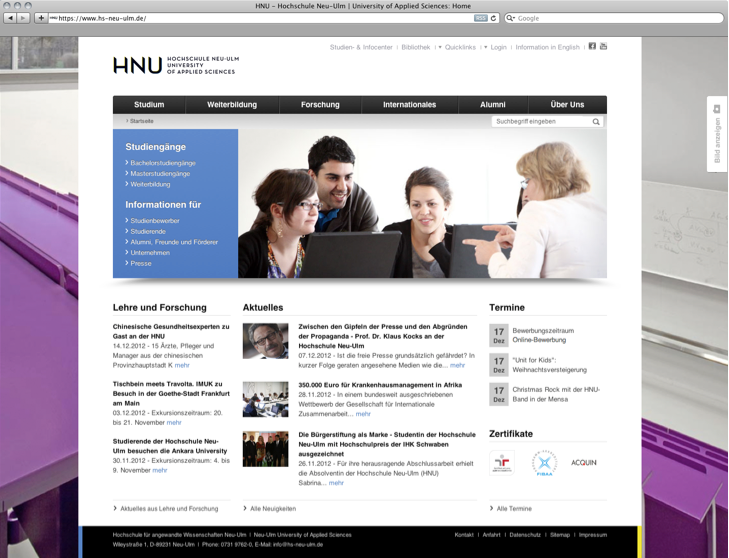

Figure 8: Homepage of the HNU-Website. Source: www.hs-neu-ulm.de

Figure 9: The "Studies" subpage of the HNU website.

In Part 1, we introduced readers to the HNU (Neu-Ulm University of Applied Sciences), where we work. To continue the case study, we'll now return to the same redesign of our university's website.

When we relaunched the university’s website, we applied a human-centered design process, following the recommendations of ISO 9241-210, and conducted usability evaluations during the design cycles.

We began with Phase 1, where we identified the context of use for different user groups, such as students using the university website. We observed students using the current site and noted that their tasks ranged from simple things, like finding the daily menu, to more complex tasks such as registering for courses. We noted how often they accomplished these tasks at home versus at the university, and when they used laptops versus smartphones. This helped us understand the context in which they interacted with the site.

Then we moved on to Phase 2, where we defined usage requirements. For example, in the scenario “student conducts research for paper,” we identified that the student needed to be able to find relevant books for their paper topic. We noted all requirements like this, for each scenario, focusing on the student’s point of view rather than the technical implementation required.

Next, we began to design a solution based on the usage requirements: Phase 3. In our case, we created a prototype of the homepage that allowed students to accomplish the goals in their primary scenarios, including the ones we've mentioned: looking up the daily menu, registering for classes, and researching a paper. We paid special attention to making the homepage reflect the students' perspective rather than our own. For example, students spent just as much time thinking about their lunch as about their assignments, so we needed to make both equally accessible, even if we, as researchers, considered the assignments more important.

Lastly, we moved into Phase 4 and evaluated the website to validate our work and observe users' reactions. The aim was to check:

- The completeness and accuracy with which users reached certain goals (i.e., effectiveness, ISO 9241-11).

- How much effort users needed to reach these goals (i.e., efficiency, ISO 9241-11).

- How free from discomfort users were, and how positive an attitude they maintained towards the website (i.e., satisfaction, ISO 9241-11).
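ISO 9241-11 defines these three dimensions, but how a team turns them into numbers is a choice. As a minimal Python sketch, assuming one common operationalization: task completion rate for effectiveness, mean time on task across successful attempts as an efficiency proxy, and the mean of a post-task rating for satisfaction. The `Session` record, the 1-5 rating scale, and the sample values are hypothetical illustrations, not data from the HNU study.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Session:
    """One participant's attempt at one test scenario (hypothetical fields)."""
    participant: str
    completed: bool     # did the participant reach the goal?
    seconds: float      # time on task
    satisfaction: int   # post-task rating on an assumed 1-5 scale


def summarize(sessions: list[Session]) -> dict[str, float]:
    """Return simple proxies for effectiveness, efficiency and satisfaction."""
    if not sessions:
        raise ValueError("need at least one session")
    successes = [s for s in sessions if s.completed]
    # Effectiveness: share of attempts that reached the goal.
    effectiveness = len(successes) / len(sessions)
    # Efficiency: mean time on task, counting successful attempts only.
    efficiency = mean(s.seconds for s in successes) if successes else float("nan")
    # Satisfaction: mean post-task rating across all attempts.
    satisfaction = mean(s.satisfaction for s in sessions)
    return {
        "effectiveness": effectiveness,
        "efficiency_seconds": efficiency,
        "satisfaction": satisfaction,
    }


if __name__ == "__main__":
    # Made-up values for illustration only.
    demo = [
        Session("P1", True, 120.0, 4),
        Session("P2", False, 300.0, 2),
        Session("P3", True, 180.0, 5),
        Session("P4", True, 150.0, 3),
    ]
    print(summarize(demo))
    # {'effectiveness': 0.75, 'efficiency_seconds': 150.0, 'satisfaction': 3.5}
```

Counting time only for successful attempts keeps failed, abandoned sessions from distorting the efficiency figure; other teams report failures separately or use goals achieved per minute instead.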

We chose a few scenarios that would best cover the most important tasks for the students, such as:

You are at home and have started writing a paper for one of your courses on Healthcare Management. Please go to the HNU website, find the latest books on the topic that are in stock at the university's library, and reserve one of them.

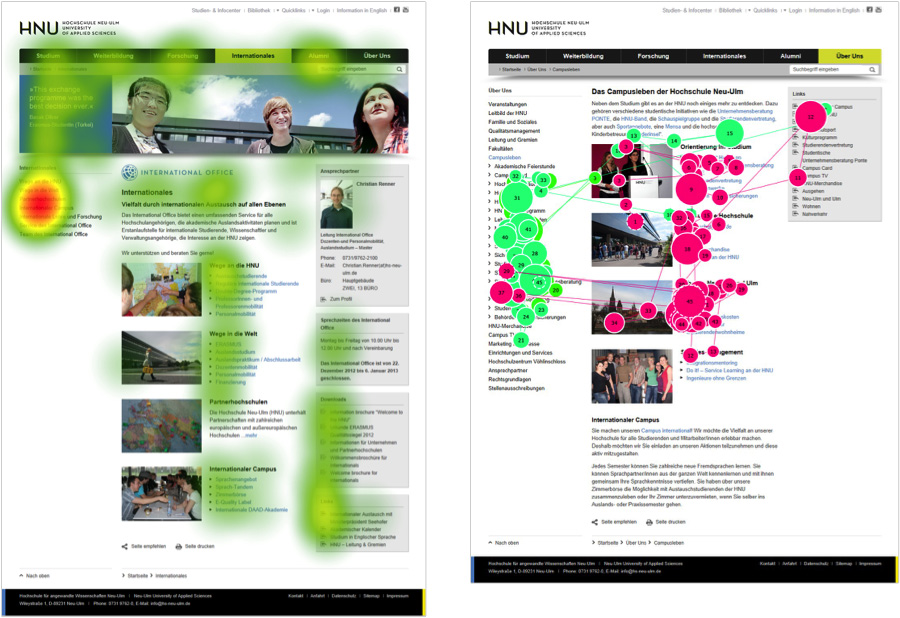

We ran the test using Tobii Studio, software that allowed us to conduct a live test in the browser so that participants could use the website in its natural environment. Tobii Studio also records user interactions throughout the test, which helped us analyze the data afterwards. We studied participants' visual attention with our screen-based eye tracker, and we asked them to think aloud during the test so we could follow their thought process and gain insights into their expectations and level of satisfaction.

Figure 11: Test room with stationary eye tracker T60 in UX Lab of the HNU. On the left there is the observation room with a one-way mirror that is only visible if you dim the light in the test room.

When watching students attempt to find books to write a paper, we noticed that some of our participants had problems finding the library’s subsite under the right main-menu option. This is a violation of the ISO 9241-110 dialogue principle of self-descriptiveness. We realized we needed to improve the labeling of the main menu, so the user could better find the library (a high-priority area) on the site.

Figure 12: Heat map and gaze plot view of the HNU website.

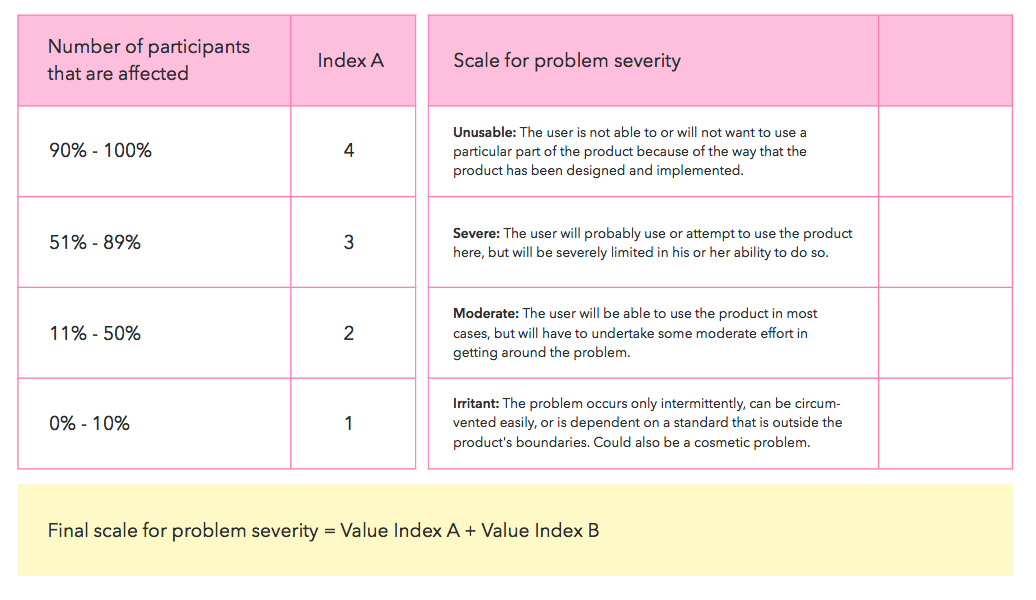

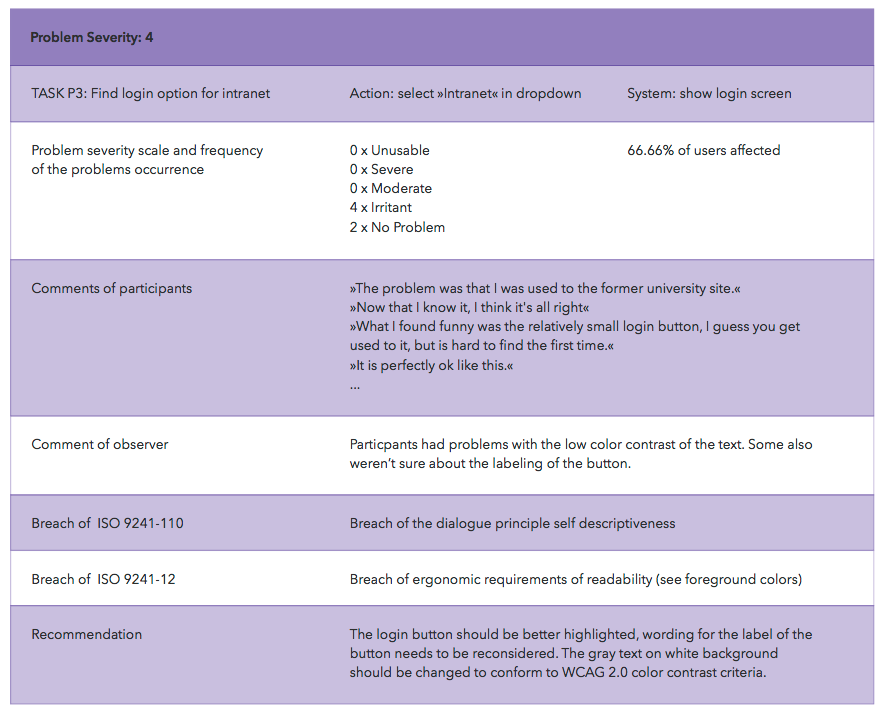

As we analyzed the results of the usability test, we found many more violations of the ISO standards. We categorized them by dialogue principle and prioritized them by combining Jeff Rubin's problem severity scale with the frequency of each problem's occurrence: the higher the combined score, the more urgent the fix. We then examined each usability problem and recommended design solutions based on participants' feedback.

Figure 15: The final problem severity combines an index for the number of affected participants (Index A) with Rubin's problem severity scale (Index B). For example, if 70% of participants had a problem during the test (Index A = 3) and the problem stopped them from using the system completely (Index B = 4), the final problem severity is 3 + 4 = 7. That is very high and will probably lead to a key finding of the test.
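The prioritization in Figure 15 is simple arithmetic: a frequency index (Index A) plus Rubin's severity rating (Index B). The following is a minimal Python sketch of that scoring under stated assumptions. Only the worked example (70% affected gives Index A = 3; a problem that stops users completely gives Index B = 4; total 7) comes from the article. The frequency band boundaries and the severity labels are assumptions modelled on the bands commonly attributed to Rubin's Handbook of Usability Testing, and the helpers `frequency_index`, `final_severity` and `prioritize` are hypothetical names.

```python
# Index B: severity labels and scores (assumed, modelled on Rubin's scale).
SEVERITY = {"unusable": 4, "severe": 3, "moderate": 2, "irritant": 1}


def frequency_index(share_affected: float) -> int:
    """Index A (1-4) from the share of affected participants (0.0-1.0).

    The band boundaries are an assumption; the article only confirms
    that 70% of participants maps to Index A = 3.
    """
    if not 0.0 <= share_affected <= 1.0:
        raise ValueError("share_affected must be between 0.0 and 1.0")
    if share_affected >= 0.90:
        return 4
    if share_affected > 0.50:
        return 3
    if share_affected > 0.10:
        return 2
    return 1


def final_severity(share_affected: float, severity: str) -> int:
    """Final problem severity = Index A + Index B (range 2-8)."""
    return frequency_index(share_affected) + SEVERITY[severity]


def prioritize(problems: list[tuple[str, float, str]]) -> list[tuple[str, int]]:
    """Rank (name, share_affected, severity) problems, most urgent first."""
    scored = [(name, final_severity(share, sev)) for name, share, sev in problems]
    return sorted(scored, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # The article's worked example: 70% affected, task blocked completely.
    print(final_severity(0.70, "unusable"))  # 7
    # Hypothetical problem list, for illustration only.
    demo = [("Problem B", 0.20, "irritant"), ("Problem A", 0.70, "unusable")]
    print(prioritize(demo))
```

Adding the two indices, rather than multiplying them, keeps the scale small (2 to 8) and lets a very frequent minor problem rank close to a rare blocking one; teams that want blockers to dominate sometimes weight Index B more heavily.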

Figure 16: An example of one of our findings as we present it in the test report.

Based on the study, we were able to derive appropriate design solutions and improve the HNU website. These recommendations formed the groundwork for our next design cycle in an ongoing human-centered design process.

Final Thoughts and Next Steps

We hope our article has inspired other designers to think about ways to include standards for UX processes in their work. To learn more, please refer to the resources below.

- Several institutions provide training on UX standards, such as Fraunhofer or the HCD Institute, where Prof. Danny Franzreb and Prof. Patricia Franzreb offer UX and usability seminars on demand.

- Human-centered design for interactive systems ISO 9241-210:2010

- Usability Testing Demystified

- The Encyclopedia of Human-Computer Interaction, 2nd Ed.

- Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests
