This article was originally published on July 2, 2013. It was revised on July 25, 2017 to include updated recommendations.

Guerrilla usability testing is a powerful technique. Designer Martin Belam describes it as “the art of pouncing on lone people in cafes and public spaces, [then] quickly filming them whilst they use a website for a couple of minutes.” Let’s skip the pouncing part and instead focus on its subtleties, including how to obtain and share feedback with our team.

I recently worked on a quickstart project in which my team was asked to build a responsive website in a short amount of time. We had very little time to code (let alone conduct research), yet by employing guerrilla usability testing along the way we collected feedback on the brand’s positioning. Eventually, we aligned our designs with both customer expectations and business goals.

Once a week throughout the project, we tested different kinds of prototypes to bring the business’s ideas to life. For example, midway through development, we sketched a mobile version of the site on index cards and ran a quick assessment. This revealed navigational problems (which led us to rethink a key point in the customer journey) and even ended up shaping some of the brand’s media material. What’s more, guerrilla usability testing opened our stakeholders’ eyes, prompting them to challenge their own deep-seated assumptions about “the user.”

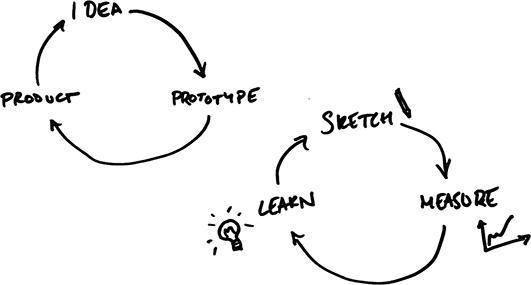

We iterated through our design ideas using lo-fi techniques like paper prototyping. Sketch by Chris Cheshire.

The bottom line? Guerrilla usability testing proved to be an easy-to-perform technique for refining the user experience. It helped us validate (and invalidate) critical assumptions cheaply and quickly.

Breaking it down

It’s hard to see the magic that guerrilla usability testing affords and not want in on the action, right? Here are some basic questions to consider before getting started:

- What shall we test?

- Where do we test?

- With whom do we test?

- And, of course, how do we test?

What shall we test?

One of the best parts about this kind of testing is that it can be done with almost anything, from concepts drawn on the backs of napkins to fully functioning prototypes. Steve Krug recommends testing earlier than we think we should, and I agree: get out of the building as soon as possible.

Test what the product could be so as to shape what the product should be. Even loosely defined UI sketches can be a great way to evaluate a future product. In fact, recent research shows that lower-fidelity prototypes can be more valuable for evaluating both high- and low-level user interactions.

Where do we test?

Where we conduct tests affects how we perform and document our work. For instance, if we’re testing a new mobile app for a retail chain, we might go to the store itself and walk the aisles; if we’re working on “general” office software, we might test it with coworkers in a different part of the office. The point is: let context drive the work.

With whom do we test?

When designing for the consumer mass market, it’s easy enough to ask friendly-looking strangers if they have a couple of minutes to spare. Public spaces and shopping centers are some of the best places to do this because of the sheer amount of foot traffic they receive (as well as their relaxed atmosphere). With more specific user groups, however, it’s useful to target participants based on context (see above) and demographics. Nowadays you can also recruit people for remote guerrilla usability testing through public forums such as Reddit, Quora, or LinkedIn Groups. Try lurking on the forums to find the right people, or write a simple post outlining your intent and the incentive you’re offering.

Coffee shops are great because you’ll often find test subjects from varied cultural backgrounds and age ranges.

How do we test?

Testing is fairly straightforward: have participants talk aloud as they perform tasks. Use the think-aloud protocol to test overall product comprehension rather than basic task completion. The key is to watch customers fiddle with a product while we silently evaluate its usability. As Sarah Harrison explains, “Observing users is like flossing–people know they’re supposed to do it every day, but they don’t. So just do it. It’s not a big deal.”

Always start with open-ended, non-leading questions like:

- What do you make of this?

- What would you do here?

- How would you do [that]?

By answering these kinds of questions, participants tell a loose story in which they explain how they perceive a product. Along the way, we can generate ideas for how to improve things in the next iteration.

Employing the technique

Guerrilla usability testing is very much about adapting to the situation. That said, here are some helpful hints that I find consistently work in different international contexts:

- Beware confirmation bias. Confirmation bias, also known as myside bias, is the tendency to search for and favor information that confirms existing beliefs. While coffee shops and online forums are great places to find participants fast, spinning up a test at the most convenient location could just be a way of gathering information selectively. This matters especially in emotionally charged scenarios, such as testing designs. Understanding biases can help designers manage subjectivity and account for context.

- Explain what’s going on. Designers should be honest about who we are, why we’re testing, and what sort of feedback we’re looking for. Oftentimes, it’s best to do this with a release form, so that people are fully aware of the implications of their participation, such as whether the material will be used only internally or shared globally at conferences. These sorts of release forms, while tedious to carry around, help establish trust.

- Be ethical. Of course, being honest doesn’t mean we need to be fully transparent. Sometimes it’s useful to withhold certain information, such as the fact that we worked on the product they’re testing. Alternatively, we might tell white lies about the purpose of a study. Just make sure to always tell the truth at the end of each session: trust is essential to successful collaboration.

- Make it casual. Lighten up tests by offering cups of coffee and/or an incentive in exchange for people’s time. Standing in line or ordering with a test subject is a great opportunity to ask questions about their lifestyle and get a better feel for how a test might go. If you’re testing remotely, you might offer a small Amazon gift card, which is also a good opportunity to ask about purchasing experiences.

- Be participatory. Break down barriers by getting people involved: ask them to draw (on a napkin or a piece of notebook paper, for example) what they might expect to see on the third or fourth screen of a UI flow. This doesn’t need to be a full-blown user interface, just a rough concept of what’s in their head. You never know what you’ll learn by fostering imagination.

- Don’t lead participants. When you sense confusion, ask people what’s going through their head. Open them up by gently prodding, saying, “I don’t know. What do you think?” People in testing situations often feel as though they are being tested (as opposed to the product itself), and can therefore start to apologise or shut down.

- Keep your eyes peeled. It’s important to capture passing thoughts for later analysis. Taking ethnographic-style observation notes is one good way to record what you were thinking during tests. Don’t get too hung up on formalised notes, though; most of the time your scribbles will work just fine. It’s about triggering memories, not showing off at an academic conference.

- Capture the feedback. A key part of any testing process is capturing what we’ve learned. While the way we do this is a personal choice, there are a few popular tools available: apps like Silverback or UX Recorder record screen activity along with a test subject’s facial reactions. Other researchers build their own mobile rigs. The important thing is to use tools that fit your future sharing needs.

- Be a timecop. Remember, this isn’t a usability lab with paid users. Be mindful of how much time you spend with test subjects and always remind them that they can leave at any point during the test. The last thing you’d want is a grumpy user skewing your feedback.

Sharing the feedback

Conducting the tests is only half the battle, of course. To deliver compelling and relevant results from guerrilla usability tests, we designers need to decide strategically how we’ll share our findings with our colleagues.

When analysing and preparing captured feedback, always consider your audience. The best feedback reflects stakeholders’ needs and kickstarts important conversations between them. For example, developers who need to evaluate bugs will have different needs than executives who want to prioritise new features.

Next, when delivering feedback, align it with your audience’s expectations. Try editing clips in iMovie or making slides in PowerPoint. Your co-workers are probably as busy as you are, so an edited-down “trailer” that highlights relevant results, or a bullet-point summary with a few powerful quotes, is a good way to keep people listening.

Go guerrilla

At the end of the day, guerrilla usability testing comes in many forms. There’s no perfecting the art. It is unashamedly and unapologetically impromptu. Consider making up your own approach as you go: learn by doing.

Note: Thanks to Andrew Maier for providing feedback on early drafts of this article and Gregg Bernstein for encouraging the updated sentences on remote guerrilla usability testing.

Related reading

- “Guerrilla testing” by the UK’s Government Digital Service

- “Guerrilla Usability Testing” by Andy Budd

- “10 tips for ambush guerrilla user testing” by Martin Belam

- “Recording Mobile Usability Tests” by Jenn Downs

- “Failing Fast: Getting Projects Out of the Lab” by Tony Hillerson, Alan Lewis, Scott Green, Ryan Stewart, and Randy Rieland

- “Rocket Surgery Made Easy” by Steve Krug

- “Talking Aloud is Not the Same as Thinking Aloud” by Mike Hughes