One of the most important tasks for any designer is to make their products and services as easy as possible to use. It doesn’t matter if the product or feature is very useful if the users struggle to use it.

When the iPhone stormed into the mobile phone market, it didn’t really have features that others lacked. Quite the contrary: the first iPhone didn’t even have 3G connectivity, which was already pretty ubiquitous in competitors’ offerings. What made the iPhone so successful was its very intuitive, responsive, and fun-to-use touch user interface.

Apps today still use the same user interface components and gestures that the iPhone introduced in 2007. We touch, swipe, and type with a slow and cumbersome on-screen keyboard. This tells us that the UI paradigm Apple created was a good one, but like any paradigm, it’s nowhere near perfect.

Cumbersome Touch Interaction Use Cases

As mobile apps and their usage are becoming more complex, some of the usual UI patterns are reaching their limits. Some of the common challenges with touch user interfaces include:

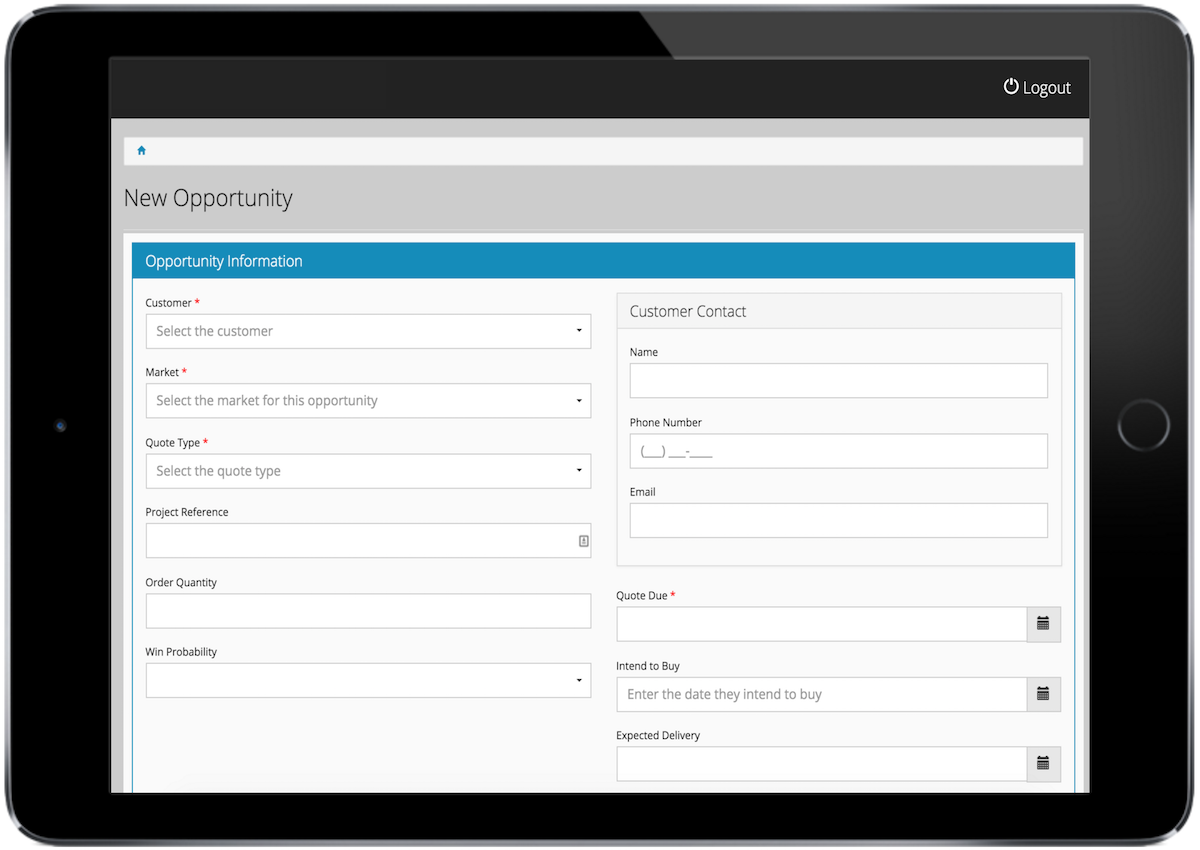

Filling complex forms

While typing in itself is at least bearable on a touch screen, filling a form that consists of date fields, selects, text, and numbers is often barely doable.

When the user selects the first input, the on-screen keyboard blocks the next input. To select the second input field, the user needs to do all kinds of tricks to unfocus and scroll.

And even if the user is able to reach all the input fields easily, typing is error-prone, date pickers are not always the same, autocomplete works well, badly, or doesn’t work at all, etc.

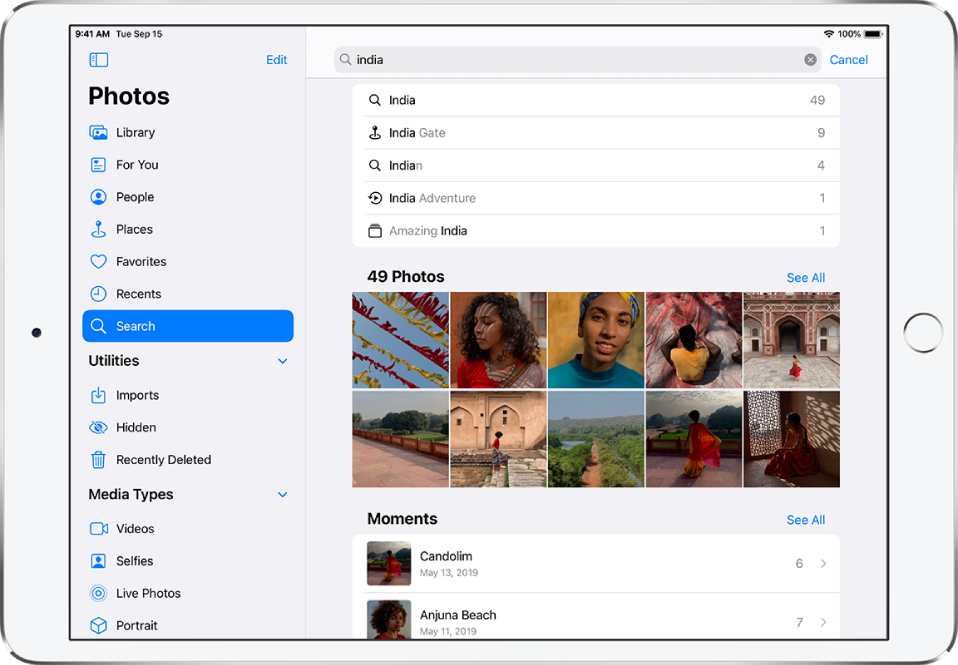

Searching for and adding items

Often the user needs to select many items from a bigger inventory of items. This is the case in many e-commerce platforms and many professional applications such as CRMs and it’s surprisingly common in all kinds of applications. This pattern is even used when sending an email to many recipients.

This too requires a combination of typing and selecting, which is cumbersome with a touch screen UI. First the user types something, typically the first few letters of an item, and then selects the item from a list. After the selection, they need to move the focus back to the text input and type again.

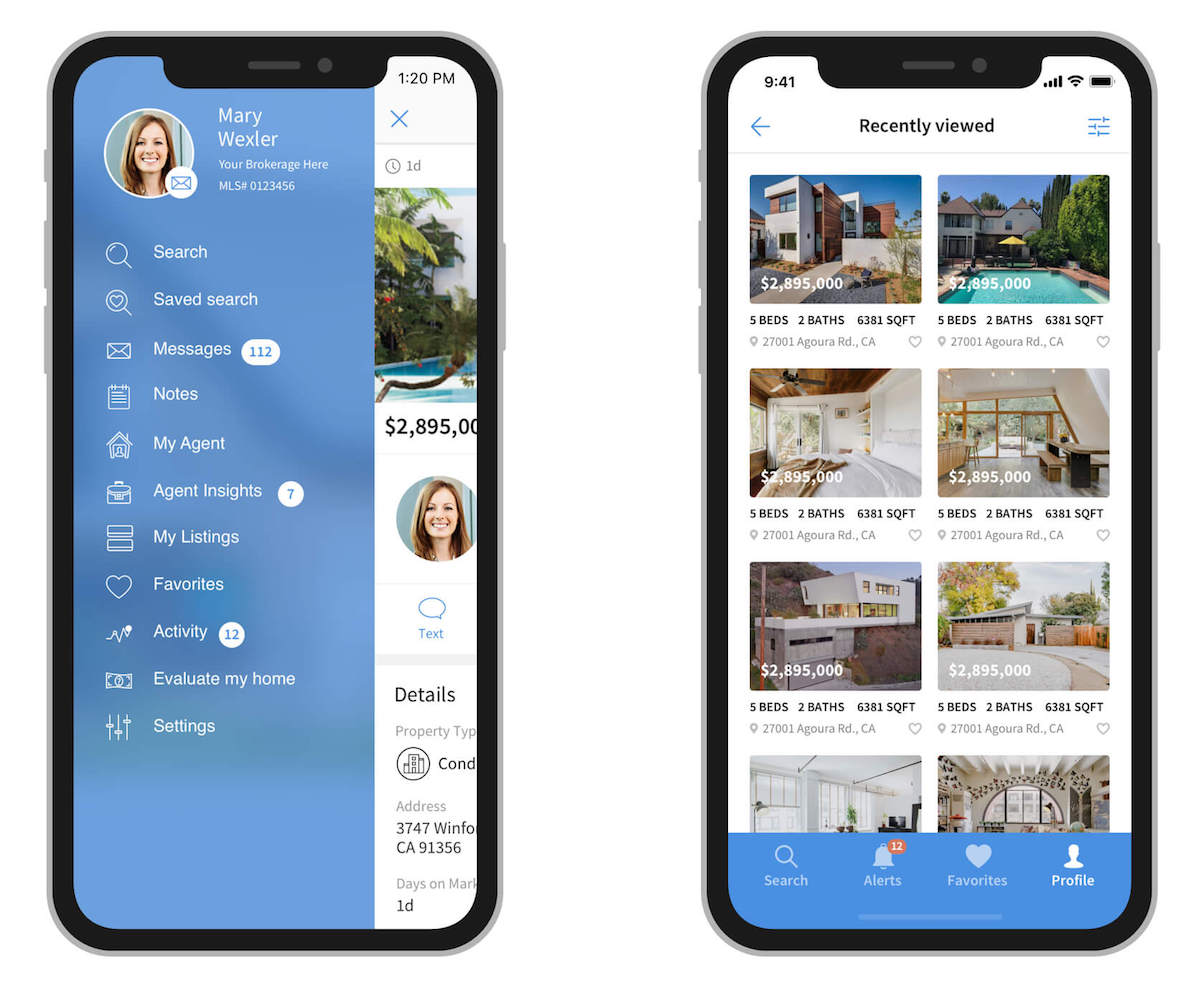

Deep navigation

Because a touch user interface requires a button for each feature and screen real estate is limited, designers have to resort to nested menus and navigation in which every item belongs to a category or set of items.

The problem is that the names of the categories and sets that subitems belong to are not self-evident. Say you want to change your profile picture and you have to choose between Profile and Settings. Which one includes the profile picture? It’s impossible to tell without trying.

A related problem with a more complex app is that no matter how well you try to plan your hierarchies and information architecture, the more features you add, the harder it becomes to reach the last leaves.

Using Voice to Enhance the Touch Screen Experience

Voice can be up to four times as fast an input channel as typing on a touch screen. And even though speech recognition is never perfectly accurate, typing on a touch screen is error-prone too, often with a higher error rate.

But voice should not be seen as a replacement for the keyboard or vice versa. While saying “Turn off the lights” can be the most convenient way of turning off the lights, typing that to accomplish the same task would be pretty cumbersome.

Unlike typing, speech is the most natural means of communication for us humans. We learn to type but we have an innate need to talk. That’s why talking and writing are not at all the same, even if they can be used to express exactly the same things.

Voice user interfaces should hence not be thought of as a fast way to write but as a fast way to accomplish tasks. If you are looking for a certain product, say an iPhone SE 2020 in red, it can be convenient to type “iPhone SE 2020” into a search box, click the product that appears as the first result, and look for color options.

However, if you asked a clerk for the same product, you would not say “iPhone SE 2020”. You would ask something like “Do you have the new cheaper iPhone in stock?”. Once the clerk answers, you could refine your request by saying “How about in red?”

A touch screen app enhanced with a voice user interface should be something in between. It should let the user switch naturally between typing, touching, and speaking. It should also support natural language. And most importantly, it should react to the user’s voice in real time, not at the end of the utterance as current voice assistants and smart speakers do.

Voice Alone Isn’t The Complete Solution

Earlier we presented three common usability issues with touch screen user interfaces. Let’s consider how they could be improved by voice.

First, let’s think of filling more complex forms.

To book a flight, the user needs to give at least three different pieces of information: From, to, and the date of the flight. They might also give other information, such as the number of passengers and the class of the flight.
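The booking fields listed above can be pictured as slots that a spoken sentence fills a few at a time. The following is only a minimal sketch under stated assumptions: the `FlightForm` class, the `fill_from_utterance` function, and the regular expressions are all hypothetical names and rules invented for illustration, and a real product would rely on a speech recognizer and a proper natural language understanding model rather than pattern matching.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class FlightForm:
    """Hypothetical form state shared by touch and voice input."""
    origin: Optional[str] = None
    destination: Optional[str] = None
    date: Optional[str] = None
    passengers: int = 1


# Illustrative patterns only. They are deliberately naive: a phrase such as
# "I want to fly" would be misread as a destination.
PATTERNS = {
    "origin": re.compile(r"\bfrom ([a-z]+)"),
    "destination": re.compile(r"\bto ([a-z]+)"),
    "date": re.compile(r"\bon (\d{4}-\d{2}-\d{2})"),
    "passengers": re.compile(r"\bfor (\d+) (?:passengers?|people)"),
}


def fill_from_utterance(form: FlightForm, utterance: str) -> FlightForm:
    """Update only the slots mentioned in the utterance; keep the rest."""
    text = utterance.lower()
    for slot, pattern in PATTERNS.items():
        match = pattern.search(text)
        if match is None:
            continue  # the user did not mention this slot
        value = match.group(1)
        if slot == "passengers":
            form.passengers = int(value)
        elif slot in ("origin", "destination"):
            setattr(form, slot, value.title())
        else:
            setattr(form, slot, value)
    return form
```

Because each slot is matched independently, the user could say everything in one sentence or add details in a follow-up, and any field could still be corrected by touch afterwards.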

Here’s an example of a truly multimodal user interface showcasing a flight booking form supporting voice input:

Second, how about searching for and adding items? On a traditional touch user interface, this is a repetitive task that requires the user to switch between typing on the on-screen keyboard and selecting.

Again, the touch screen has its benefits: modifying the selections is simple. For instance, if the user has added 6 bananas but wants to add one more, a tap of a button can do it. A demo of a better way to do it can be found here.

And third, deep navigation. This is a task where voice really shines and offers novel approaches to user experience.

Unlike touch user interfaces, voice doesn’t need a path that the user has to follow to reach a certain screen. Voice enables the user to jump from any view to any other view using natural language.

For instance, “change my profile picture” can work on all screens and jump the user directly to the correct view. And the best part is that the user can express this intent in many different ways, such as “where can I set my photo” or “settings for my profile image”.
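As a rough illustration of how several phrasings can lead to the same screen, here is a minimal sketch that scores an utterance against keyword sets. The view names, the keywords, and the `resolve_view` function are assumptions made up for this example. Keyword overlap is a stand-in for the natural language understanding a real voice interface would use.

```python
import re
from typing import Optional

# Hypothetical views and the words that hint at them.
VIEW_KEYWORDS = {
    "profile_picture": {"profile", "picture", "photo", "image", "avatar"},
    "notifications": {"notification", "notifications", "alerts", "mute"},
    "payment_methods": {"payment", "card", "billing"},
}


def resolve_view(utterance: str) -> Optional[str]:
    """Return the view whose keywords overlap most with the utterance."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    best_view, best_score = None, 0
    for view, keywords in VIEW_KEYWORDS.items():
        score = len(words & keywords)
        if score > best_score:
            best_view, best_score = view, score
    return best_view  # None when nothing matched
```

With these assumed keywords, all three phrasings quoted above resolve to the same `profile_picture` view, regardless of which screen the user is currently on.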

Another unique feature of voice in navigation is that it gives the application owner valuable information on the naming conventions and features that the users would like to have in the app. If a lot of users mention features that don’t exist, this is a very good indicator that this kind of feature should be built. This is data that touch screen analytics can’t provide.
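One simple way to collect this signal is to count the requests the app could not serve. The sketch below assumes the spoken request has already been reduced to a short feature phrase. The feature list and the `record_request` function are hypothetical and exist only to show the idea.

```python
from collections import Counter

# Hypothetical set of features the app already supports.
KNOWN_FEATURES = {"profile picture", "notifications", "payment methods"}

# Tally of requested features that do not exist yet.
unmatched_requests: Counter = Counter()


def record_request(feature_phrase: str) -> bool:
    """Return True if the feature exists; otherwise count the miss."""
    normalized = " ".join(feature_phrase.lower().split())
    if normalized in KNOWN_FEATURES:
        return True
    unmatched_requests[normalized] += 1
    return False
```

Calling `unmatched_requests.most_common()` would then give a ranked list of the features users ask for most often but can’t find.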

Integrating Voice Into Your Designs

When designing a website or a touch screen application, designers should start thinking of the best use cases for voice features. These are often related to information-heavy data input and to tasks that typically happen when the user is on the go or when the user’s hands are otherwise tied.

Designers should stop thinking only about buttons and text and boldly think of the best ways to accomplish a task. User journeys should include situations where the user can’t or doesn’t want to type.

By adding a new tool, voice, to the design toolbox, designers can build even better user experiences.