Results are the reason we run any research, and surveys are no exception. Survey results can help to provide meaningful insights, shape product plans, prove or disprove hypotheses and expand on original ideas.

The purpose of surveys and unmoderated research is to gain knowledge off the back of insights. To get insights, you need accurate data; This isn’t always easy to come by. There is always the potential for bias to creep in and disrupt any meaningful results. By the time you have got your fingernails dirty by digging through reams of excel spreadsheets, it’s sometimes too late to spot bias, and this leads to invalid results and questions.

Bias at the Content and Design Stage

Considerations like branding a survey, showcasing prototypes, assumptive questions and interlink questions, question order, and social desirability bias impact the survey itself.

The basis of any survey includes content, design, and development focused on objective answers and clarity.

Survey design feels like the bias type that is most in our control. We can go through the survey, identifying bias in questions, removing unnecessary logos and mentions of brands, removing any assumptive language, and adding further options for specific questions. Just because this is in our control, doesn’t mean that things will always go swimmingly.

One point that is worth highlighting when it comes to analyzing results, surveys that focus on instant data (political and COVID surveys are good examples of this) can often lead to out-of-date responses. The news changes so quickly that often the data is out of date before the survey is complete. Then you’re in an awkward position of reporting on the news from two weeks ago trying to write a survey that predicts the future.

We can’t all do that! We are not all Simpson’s writers

Lisa Simpson as the future president and vice president Kamala Harris. Simpsons’ fans believe the writers predicted Kamala Harris’s inauguration outfit.

Bias at the Data Collection Stage

When finding participants, biases like sampling bias, demand characteristic bias, extreme responding bias and non-response bias, again, tamper with the data you get out.

Get the right audience to complete surveys at the right time, this way you will get better results to prove or disprove the original hypothesis. This is easier said than done with external pressures and non-vetted research panels. But if we were to develop a financial services app, we would want background on the participants’ previous financial experiences to ensure anyone taking part was the right “fit” for the product.

A lot of respondent bias comes down to a fact that is difficult to swallow for a lot of people. Getting the right people is more important than getting the most people.

We would all love 2,000 engaged respondents, but the likelihood is over 15% of participants will rush through surveys, clicking on extreme answers, and won’t be engaged in the survey itself. What is as important is that we recognize this and disqualify participants using rules that we can create in the analysis stage. A data management software with Name Matching features may help you extract data needed to identify which respondents to disqualify.

Some rules might be; to disqualify any participant that takes under 3 minutes to complete the survey, or to disqualify any participant that ticks “I strongly agree” to every answer. These might filter out the worst or the data and lead to less skewed results.

Bias in Data Analysis

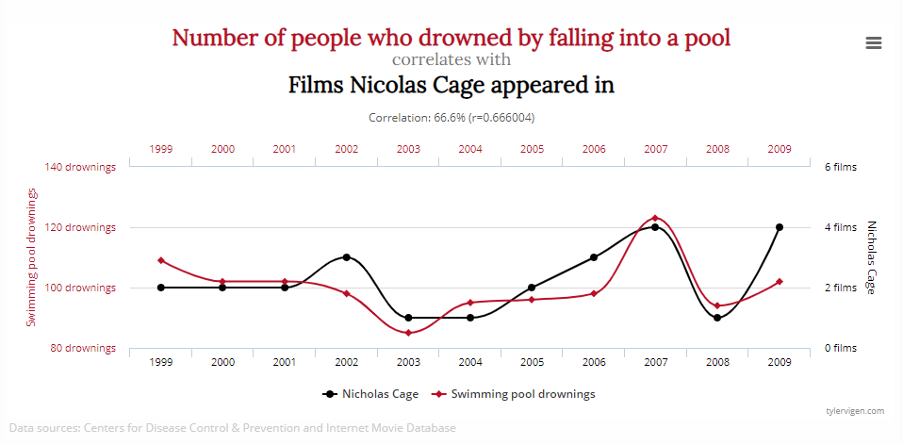

When we look at data, it’s important to remember we are not the participants. Correlation and causation examples, like the correlation between films Nicholas Cage appeared in and the number of people who drowned by falling into a pool, are not always accurate.

Graph showing the correlation between the number of people who drowned by falling into a pool with films Nicolas Cage appeared in. The correlation rate is 66.6%. Source: https://www.tylervigen.com/spurious-correlations

Instead, we can look at what the data is showing and not why the data is showing it. When you look at data for the first time, just take out the numbers. Don’t try reading into too much at once. Your brain will use different sides to calculate number skills and creativity. Focus on working one side at a time.

Estimating research accuracy

On to the juiciest point, we have covered how to eliminate inaccuracy with good design, exceptional participants, and touched on biases in the analysis stage, but now we’re looking at calculating errors in the research to determine how the results look when compared with population values.

Research accuracy should be reflective of the true population value plus or minus any errors. In short, a survey is accurate if it is close to the truth with repeated measurement.

The more repeat surveys we do to similar audiences, the closer we get to this perceived “True population”. But when was the last time we repeated a survey? That’s where we calculate what is called a confidence interval. The most common measurement of sampling error in surveys is the 95% confidence interval.

The simplest description of a 95% confidence interval is “If you repeat the same survey many times and measure the same indicator with the same methodology and same sample size, 95% of the results of these surveys will have confidence intervals which overlap the true value for this indicator in the population.” (Source: http://conflict.lshtm.ac.uk/page_47.htm).

To simplify, if you repeat the survey, your answers will be 95% accurate every time. Yes, this is an assumption, but with this range, we can calculate how likely the results are around the true population value. With confidence intervals we can calculate the precision and amount of sampling errors, giving us an idea of how close we are to true population value.

But alas, with good precision and a confidence interval we cannot say this is representative of true population value. There are still some elements we need to ensure, and it comes back around to bias.

The aim should always be to identify bias and minimize it, rather than assume an objective survey has eliminated bias.

Digging Your Nails into the Data

It’s too easy to look at data then read insights into each question, but before diving in there are a few things that need to be addressed.

Go back to objectives

With all the excitement of insights, it’s often easy to forget what the purposes of research are, this isn’t just our own perceptions, but often individuals that are involved in data analysis and research who aren’t stakeholders or involved in objective-setting will not be aware of the areas of focus of the research. Brief data analysts and revisit the objectives yourself to get the most out of the results and find the right insights.

Look at segmentation

Before analyzing data, look at the segments you want to break down and the number of participants in each split. This will tell you if the survey is ready to close or if you need to expand certain areas. Ultimately, if you’re running a survey on pet owners around the country, and you only have 4 Welsh dog owners, you might need to expand on that area before reporting on insights of all dog owners in the region!

Exclude bias and anomalies before analysis

“Yay! The survey has finished. Let’s get straight in and get those results as quickly as we can!”

I’m 100% on board with efficiency, but let’s stop for a second. How can we exclude bias before we draw insights from the surveys? The easiest way is to look at the time taken to complete the surveys, multiple IP addresses (if incentivizing surveys properly) to ensure there aren’t multiple individuals trying to apply, and filters in the data to exclude individuals who click through on the most extreme answers. This filtering and exclusion may only clear 10% of data, but it will mean insights and results are more accurate, 10% of 100 participants could swing the data by 9%, so be sure to look at this before reporting on insights.

Expand on quality data if appropriate

Some participants will intrigue you with their responses, particularly when dealing with data analysis on longer, open text questions. Sometimes participants go into a lot of depth in these answers, and it requires more context, feedback, and more questions. This is where booking 1:1 feedback sessions off the back of quant research come in, and where quant and qual can start to work together.

Go data first

Multi-tasking is hard. I can’t do it effectively. With data analysis, there are 3 stages. We have already covered all three in this piece!

- Cleaning the data – removing rogues responses

- Analyzing the data – Looking at numbers and percentages

- Getting the insight – comparing results with our objectives

These three data stages require different skillsets. Rather than going through, question-by-question, look to get all the data analysis sorted, then move on to the insights. The reason is these tasks take different levels of concentration, skills sets, and parts of the brain. It’s better to have focus and complete one task compared to completing one question.

Getting the right survey results and analysis comes back to two core elements. The data itself and aligning the insights with the initial project objectives.