In the early 1940s, U.S. Marine Major General William H. Rupertus penned the Rifleman’s Creed, memorized by every enlisted Marine to this day. Three lines seem to speak to more than merely rifles.

This is my rifle. There are many like it, but this one is mine.

My rifle is my best friend. It is my life. I must master it as I must master my life.

Without me, my rifle is useless. Without my rifle, I am useless.

For those of us who use and rely on technology as part of our daily lives, such a mantra isn’t so surprising. We use technologies such as the bicycle or automobile to commute to work, e-mail and social media to stay in touch with each other, and Untappd and Yelp to track down after-work drinks and dining. According to some commentators, this reliance on technology has made people impersonal and out of touch with each other. For others, technologies merely represent an extension of our own human agency: they are tools that allow us to extend our influence on ourselves, each other, and the world around us. And for others still, technologies enable a heightened form of social interaction that is actually more personal than what we could achieve face-to-face, because social rules and constraints are reduced or eliminated.

Just as technologies are treated as friends, so too are our interfaces. In fact, interfaces that are more natural and human-like are likely to be easier for new users to adopt, since we may lean toward treating them just like humans. Apple’s Siri is a great companion to have when “she” is able to track down lost phone numbers and directions to the latest hip charcuterie, but at what point does our relationship with Siri shift from data manager to personal assistant? More broadly, what encourages some users to shift from functional to more social relationships with their technologies? How can we use this knowledge in our design practice?

Although we’re not suggesting that every interface should be programmed with a social relationship in mind, the objective for many UX designs is to engage the end user on a consistent, sustainable, and personable level. To this end, we offer a discussion of what it means to be “social” with an interface, and provide a deeper understanding of the pros and cons of designing with social relationships in mind.

What It Means To Be Social

In a social relationship, two people influence each other in some way, and those people see this influence as positive or negative. This is a simple and useful definition, but as professor and researcher Jaime Banks (one of the coauthors of this piece) has argued in her own research, its focus on “people” leaves out the potential for humans to have social connections with their technologies. However, if we replace “people” with “agents” in our definition, the potential for a human-technology social relationship comes into focus. After all, agents are things that actively influence a situation or produce a specific effect, and technologies can exert many of the same influences that people do.

The idea of a human-technology social relationship might seem odd. It’s very tempting to think about people as using technology to do their bidding, as technologies are seen as tools to make certain processes easier. However, emerging research suggests that human-technology relationships might actually be a bit more complicated.

One reason for this complication is that technologies do in fact exert agency on their users. They might not do this actively (most of us don’t yet live in homes that talk to and fall in love with us), but they might do so passively, by affording certain actions and constraining others.

Psychologist James Greeno described affordances as those characteristics of a technology that enable certain actions, while constraints hinder actions. Let’s consider three interaction “archetypes” of our own creation: interfaces that might behave like social agents, each differing in its affordances and constraints.

The Gatekeeper

The Gatekeeper keeps our personal data safe and secure. A financial institution’s website requires login credentials (a username, a password, and a second-factor authenticator) that afford the user secure access to their banking information but may constrain their ability to check a balance if they forget their password. This set of affordances and constraints resembles a human doorman who may allow or deny access to a building based on an ID badge.

The Editor

The Editor keeps our language clear and proper. A smartphone set to auto-correct words based on U.S. English spellings affords the user a decent amount of protection against unsightly typos, but it may also constrain the easy typing of unconventional words or British English spellings. These interface functions mirror the ways humans may misread what someone intended to communicate and substitute their own meaning (think of toneless text exchanges).

The Vendor

The Vendor lets us sample its goods, but leaves us begging for more. An app is marketed as a sophisticated drawing program but has adopted a freemium model. It affords users the potential to create complex images with rich colors and textures, but constrains their ability to actually do so by requiring payment for the more advanced drawing tools. This type of control over resources is similar to paying a shopkeeper for tools or an instructor for lessons.

Who’s in Charge?

When humans and technology come together in a computing experience, as in the three archetypes above (and there are probably many more we could come up with), to what extent is the human or the technology in charge of what actually happens in that experience? How do users feel about this balance of power? How much an interface interaction feels like interacting with another person depends strongly on how the user feels about that balance.

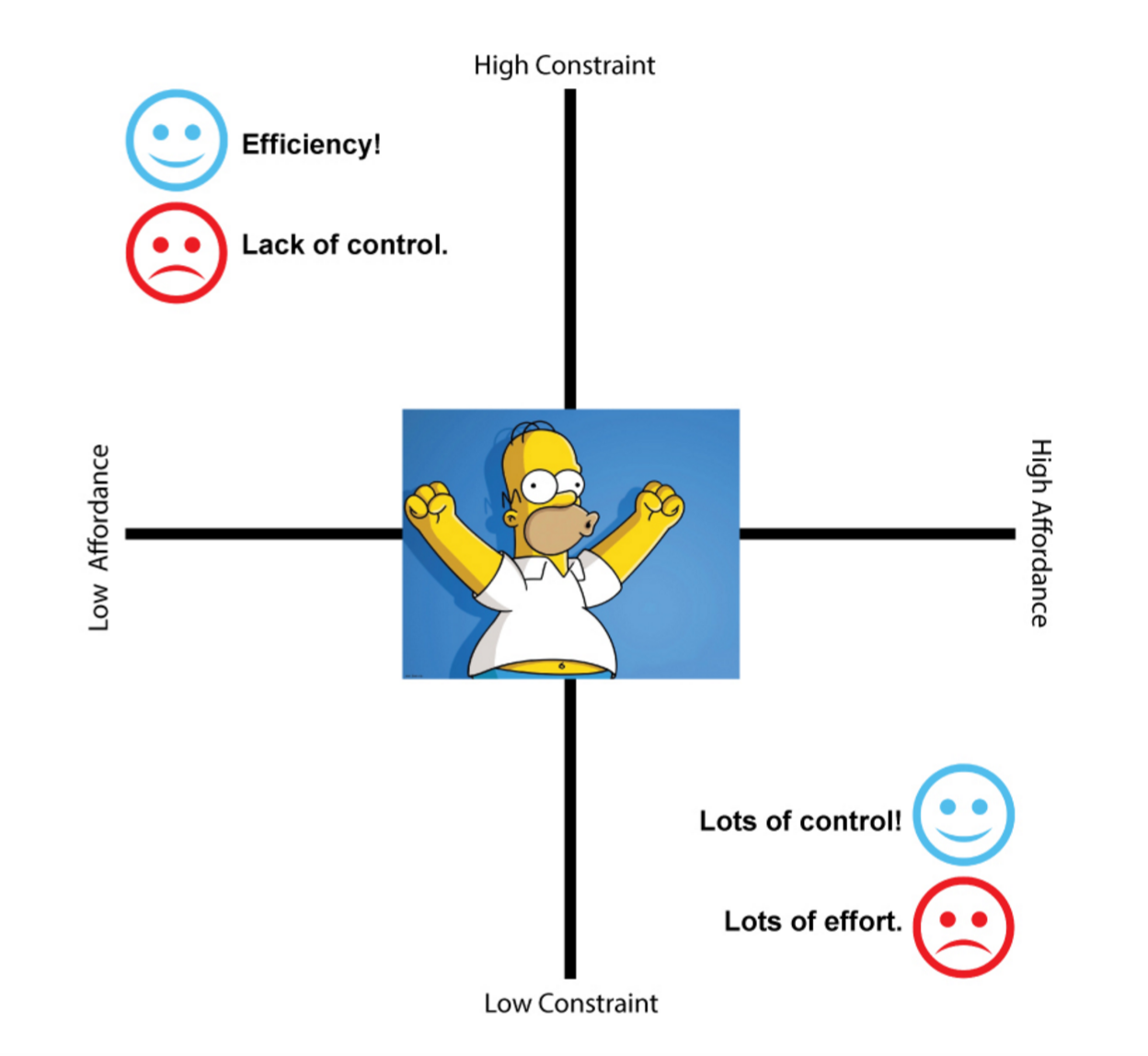

High affordances and low constraints may make users feel more in charge, but also more burdened by computing tasks. High constraints and low affordances may leave users feeling more efficient or better able to focus on other tasks, but also more subject to the interface’s demands. A “Goldilocks” balance of affordances and constraints may lead users to feel like they are in a partnership with their technologies: not too much in charge, but also not too controlled.

User interfaces should be designed with a “Goldilocks” balance of affordances (options they provide the user) and constraints (limitations placed on the user) for an optimal experience.

In this article, we’ve laid out the reasoning behind our assumption that the way an interface is designed might trigger varying levels of social interaction with that interface. In an upcoming article, we’ll draw on our own research in video games to discuss the complexity of those relationships (ranging from asocial and functional to fully social and personal), ways in which we can design to encourage these different types of relationships, and the costs and benefits of each.

For those looking for further reading, check out:

- Jaime Banks’s article on player-game relationships (a nice teaser for our next article!)

- Sherry Turkle’s TED talk on humans, connections, and technologies

- My (Nick’s) article on the uncanny valley