Every day, at the crosswalk by my house, I press the “walk” button, and I wait. Each time, as soon as the traffic cycle is complete, I get the walk signal. On a whim, one day I didn’t press the button… but at the end of the traffic cycle, I got the walk signal anyway. The button I press every day has absolutely no impact on when the light will change. Is it still worth having the button?

Buttons that do nothing are known as placebo buttons, and they exist everywhere: at crosswalks, in subway stations, and on thermostats. They serve no function, other than to provide the user with the perception that he or she has some control over an automated system.

It sounds crazy: why would it ever be better to pretend a user can impact something he or she actually can’t? Why lie to the user in these situations? Interestingly enough, it turns out users want to feel in control. Consider the following three scenarios, all three involving an online application form to perform in a local talent show.

In Scenario A, the user opens the form, and the cursor automatically moves to the first field: Name. When he has finished typing his name, the cursor moves on to Date of Birth, and then Address. Farther down the form, the questions get more difficult, asking for long-form explanations. The user isn’t sure how he wants to phrase his response to “Reason for Applying,” so he tries to skip down to the next field. Unfortunately, the form has been hard-coded to prevent him from skipping fields, and he is unable to leave “Reason for Applying” until he has typed something into the field.

In Scenario B, the user opens the form, and the cursor again begins at the first field: Name. However, in this situation the user has full control, can fill out as many fields as he wants, and can submit the form at any time – with or without completing the fields. Incomplete fields will make the user less likely to be selected for the talent show, but it’s entirely up to him whether or not to complete the fields.

In Scenario C, the user opens the form, and is greeted by the perception of complete control: he can skip between fields at will. However, when he clicks “submit,” he will be greeted with an error message, reminding him that all fields must be filled in, in order to complete his application.

Most of us prefer Scenario C, which provides us the flexibility to move at our own pace and in the order we choose, while also ensuring we fill out all the fields that will impact our acceptance into the proverbial talent show. In other words, we want both the safety of automation and the sense of control. In this article, we’ll discuss why we feel the need for control, how to create that feeling, and whether the creation of placebo buttons is an ethical UX choice.

The need for control

The human need for control can be traced back to our earliest roots. In his well-known hierarchy of needs, psychologist Abraham Maslow identifies our most basic needs as the physiological ones: health, food, and sleep. All of these require a significant amount of control: control over our environment in order to gather food, and control over our own choices to avoid disease.

It’s therefore unsurprising that we seek out control in everything from our relationships (everyone has “put their foot down” at some point or another) to the color of our living room walls (even though the old color was fine, it wasn’t perfect). It’s ingrained in us, and it gives us a sense of comfort and well-being.

This sense of control is very closely linked to what psychologists call an “internal locus of control,” or the belief that our actions have the power to impact and change a given situation. (Conversely, an “external locus of control” is the belief that we are at the mercy of external sources.) People with an internal locus of control are more likely to be confident, take better care of themselves, and have lower levels of stress.

As UX designers, we try to ensure users will have positive experiences when using our products or accessing our services. This often translates into empowering users, thus giving them the tools to find their internal locus of control. In other words, we manipulate them into thinking they have been empowered. Consider this example from Nadine Kintscher, a UX consultant at Sitback Solutions:

Today, you can adjust your screen’s brightness, disable notifications (without having to turn the phone on silent), and decide whether your phone should connect to both the data and phone network or not… Even if all these adjustments may only extend your phone’s battery by a few minutes, it gives you that satisfying warm and fuzzy feeling of accomplishment. YOU are in charge and can change these settings.

-Nadine Kintscher, User control and cognitive overload, how UX methods can help

From Kintscher’s perspective, the perception of control is more important than the user’s actual ability to control the phone’s battery life.

Perception and Deception

UX designers have long made the case for deceiving the user. Everyone from Instagram to Microsoft has used deception as a tool in improving the user experience. Don’t believe me? See if either of these examples feels familiar.

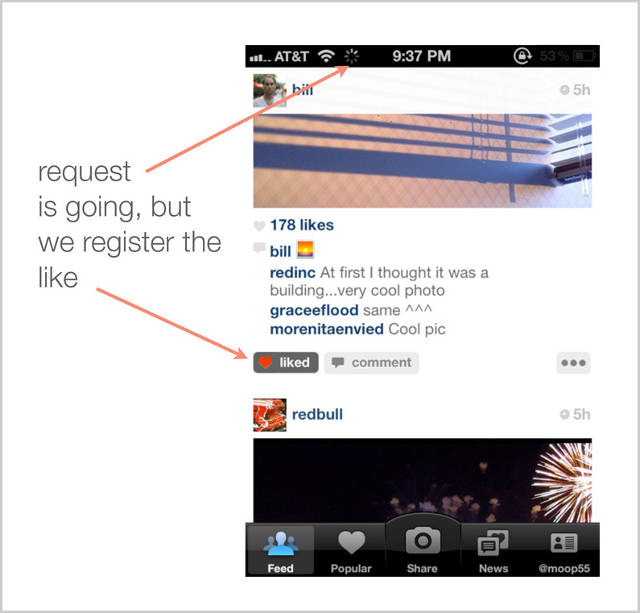

Instagram “always pretends to work”

When Instagram is deprived of a data or wifi connection, of course it can’t connect and pull down fresh images or transmit up the latest “like.” That doesn’t stop the program from appearing to be fully connected. The Instagram app is designed to function even without a connection, storing every action locally until the data or wifi connection is reestablished.

For the user, this is technically a lie—no, when she pressed “like,” the system did not upload it and her friend did not get to see it. But it’s only a little white lie; as soon as the app is back online it will dutifully pass along the “like.”
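To make the mechanism concrete, here is a minimal sketch of the “store it locally, send it later” pattern described above. It is not Instagram’s actual code; the `LikeQueue` class, its fields, and the `photo-42` identifier are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LikeQueue:
    """Hypothetical client-side store: shows likes at once, uploads them later."""

    online: bool = False
    pending: list[str] = field(default_factory=list)   # actions waiting for a connection
    uploaded: list[str] = field(default_factory=list)  # stand-in for the server's state
    shown_as_liked: set[str] = field(default_factory=set)  # what the interface displays

    def like(self, photo_id: str) -> None:
        # Update the interface immediately, whether or not we are connected.
        self.shown_as_liked.add(photo_id)
        self.pending.append(photo_id)
        if self.online:
            self.flush()

    def flush(self) -> None:
        # "Upload" everything queued; a real app would make network calls here.
        self.uploaded.extend(self.pending)
        self.pending.clear()

    def reconnect(self) -> None:
        # The connection is back, so pass along everything that was held.
        self.online = True
        self.flush()


queue = LikeQueue(online=False)
queue.like("photo-42")
print(queue.shown_as_liked, queue.uploaded)  # the UI shows the like; the server has nothing yet
queue.reconnect()
print(queue.uploaded)  # the queued like has now been passed along
```

The white lie lives in the gap between `shown_as_liked` and `uploaded`: the user sees the first, while her friend can only ever see the second.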

Progress bars mean nothing

Whether downloading content on a Mac or a PC, we’ve all experienced a progress bar at some point. This handy little liar keeps us informed with a “one minute left” notification that lasts a solid three minutes. Obviously, anyone who stops to think about it can recognize the discrepancy between the amount of time stated and the amount of time we wait, but the lying progress bar continues unabated.

A few years ago, Popular Mechanics wrote an article explaining why it is that the progress bar lies. Essentially, author John Herrman said, download speeds are inconsistent, and as a result there is no way to know how long a download might take. Thus, the purpose of the progress bar is merely to make the time feel shorter.

Using principles from the nascent field of psychology called time design, [Chris Harrison, a Ph.D. candidate at Carnegie Mellon] has figured out a way to make a progress bar feel like it’s moving faster than it actually is. After testing different movement patterns… Harrison arrived at the fastest-feeling, albeit fundamentally dishonest, progress bar: one that speeds up as it progresses, and contains a backward-moving ripple animation.

-John Herrman, Popular Mechanics
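A bar that “speeds up as it progresses” can be roughed out with a simple easing curve. The sketch below is an assumption on my part, not Harrison’s published method: the power curve, the `displayed_progress` name, and the default exponent of 2 are all illustrative, and the ripple animation is left out entirely.

```python
def displayed_progress(actual: float, exponent: float = 2.0) -> float:
    """Map true progress (0.0 to 1.0) to the fraction of the bar that is filled.

    An exponent above 1 makes the bar crawl early and accelerate toward the end.
    The power curve and the default exponent are illustrative assumptions.
    """
    clamped = min(max(actual, 0.0), 1.0)  # keep out-of-range input inside the bar
    return clamped ** exponent


for actual in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"actual {actual:.0%} -> shown {displayed_progress(actual):.1%}")
```

The bar is honest only at the very start and the very end. In between, it understates how far along the download is, so each later step looks bigger than the one before it.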

Levels and methods of deception

Obviously “but everyone does it” is not an effective rationale for lying, deceiving, or manipulating users. We live in the Information Age, and knowledge is power. Anyone seeking proof of our collective desire for knowledge need look no further than the daily articles that lambast Facebook, Google, Android, and Amazon for hiding (or not actively sharing) information we feel that the public deserves to know. The information they “hide” ranges from obscure details about our privacy settings, to potentially critical explanations of who owns our data and who might be able to track our locations. We want to know the truth, so that we can make our own decisions.

Yet, to paraphrase “A Few Good Men,” can we handle the truth? When users are faced with all possible information and infinite options, decision paralysis sets in, and they find themselves unable to make a decision or focus on the task at hand. Information without context is therefore not particularly valuable, and returning to our milder initial example, waiting for the light to turn with no control over the situation can cause frustration and a poor experience. Imagine if the people working in the office with a “placebo” thermostat one day found out they had been lied to. It would certainly upset their locus of control, and significantly increase stress!

It’s a very difficult balance. Still, without outright lying to users, we may choose to control their experience in a variety of ways. Whether or not this deception is ethically proper is for each individual designer to consider, for each individual scenario. Let’s review a few ways we might control experiences without blatantly misrepresenting facts.

Encouraging flexibility

In our first example, we looked at three variations of a form. In the third variation, users enjoyed the flexibility and control of filling out the form fields at will. The functionality never forced the user to be aware that all fields were required, and yet users were also never lied to. This flexibility can exist in other situations as well. Allowing online learners to choose their own path to course completion, for example, or providing more than one way to accomplish a goal (such as incorporating a call-to-action button as well as a link elsewhere on the page) adds flexibility while maintaining control.
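For the form itself, the difference between Scenario A and Scenario C comes down to when the check runs. Here is a minimal sketch of the Scenario C approach, where nothing is enforced until the user submits. The field names come from the example form; the function names, messages, and sample values are hypothetical.

```python
REQUIRED_FIELDS = ("name", "date_of_birth", "address", "reason_for_applying")


def missing_fields(form: dict[str, str]) -> list[str]:
    """Return the required fields that are absent or blank, in form order."""
    return [name for name in REQUIRED_FIELDS if not form.get(name, "").strip()]


def submit(form: dict[str, str]) -> str:
    # Nothing is checked while the user moves between fields; only here.
    missing = missing_fields(form)
    if missing:
        return "Please complete: " + ", ".join(missing)
    return "Application received"


# The user filled in the fields out of order and skipped the hard one.
draft = {"address": "12 Main St", "name": "Sam", "date_of_birth": "1990-01-01"}
print(submit(draft))  # reminds the user about the skipped field

draft["reason_for_applying"] = "I juggle."
print(submit(draft))  # every field is complete, so the form goes through
```

The user is free to roam, and the safety net only appears at the one moment it is needed.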

Control over the inconsequential

Any older sibling will well remember these words: “just let your little sister/brother make a decision you don’t care about!” The same holds true for users. It’s the reason banks allow us to choose profile pictures, and credit cards ask us to customize our card photos (for free!). Far from feeling trivial, these small decisions give us ownership and control over things which ultimately distract us from the fact that we have no say in the decisions that matter.

Skeuomorphism

This may seem an odd inclusion, but skeuomorphism, or the use of “real world” images to represent things in the digital world, is another way of impacting user perception. Skype, for example, uses a fake “static” noise at times, because users associate silence with a call being dropped. Ultimately, it might be useful for people to learn that digital phone calls do have true silence between sentences, something analog phones never had because the technology could not eliminate the static. But the unnecessary “static,” a mild deception, makes users happy and stops them from worrying that their call was lost.

Ethics in UX

The ethics around placebo UX are complex. Placebos are hotly contested in the medical realm; New York Times opinionator Olivia Judson once pointed out that “since deception of patients is unethical, some argue that the placebo has no place in the actual practice of medicine.” In the UX realm, they have almost no chance of doing actual physical harm, and at times they are barely even a deception. The “refresh” button on many sites is unnecessary, as the site updates automatically anyhow, but it does (technically) also provide a refreshed view of the site. Uber, a taxi-alternative institution, is based on the premise that users would prefer to track (and thus feel some measure of control over) where their ride is coming from, though the phone app does nothing to actually reduce wait times.

All we can do is continue to ask questions. For every potentially deceptive interaction, test with real users to see how they react. Ask the actual users how they feel about having, or not having, the information at hand. In addition, consider three basic questions:

- How does this information help the user?

- How does this “deception” help the user?

- How would the user feel if he/she knew all of the information I have?

As designers, we have taken on the responsibility of determining when users need control, when they need safety, and when they need the illusion of control. So long as we keep the goal of helping users at the heart of every decision, we are likely to maintain our ethics and make decisions with confidence.