The debate was about the best color for the toolbar on the webpage. The design team was fond of a particular shade of blue, while the product manager was advocating for a greener hue. Both parties had strong opinions about their choice. Who gets to decide? Was the choice right? And does it really matter anyway?

Decisions like this are often made based on diplomacy, authority, or opinion. The debate recounted above is an often-retold tale from Google, and the story has endured because the team eventually tested 41 gradations of blue to see which one users preferred. Why? It’s about more than usability or user experience. Whether or not a design choice leads to clicks can affect a revenue stream. Companies like Google know the importance of running experiments such as A/B tests to determine the right approach with data, not opinion or guesswork.

Whether the goal is to improve a landing page or a call-to-action button, A/B testing is one of the most effective ways for UX teams and marketers to make incremental changes over time. A well-designed A/B test can help a team decide between two buttons, two fonts, or even two microsites. A/B tests tell us what is and isn’t working, rather than merely what has the potential for success. In short, the results from A/B tests can lead to informed decisions based on data, not just opinions.

So what holds some people back from doing it? Misconceptions about the complexity, fear of number crunching, or not understanding the testing possibilities can be roadblocks to informative experimentation. In this article, I’ll demystify A/B testing and provide basic steps for getting started with simple tests.

A/B Testing: A Basic Definition

A/B testing is an experiment. Sometimes called split testing, it is a method for comparing two versions of something to determine which one is more successful. To identify which design approach is better, two versions are created and run at the same time, with each version shown to half of the same target audience. The test measures which one performed better with that audience. The version that prompts more users to take the desired action, that is, the one with the higher conversion rate, is the winner.

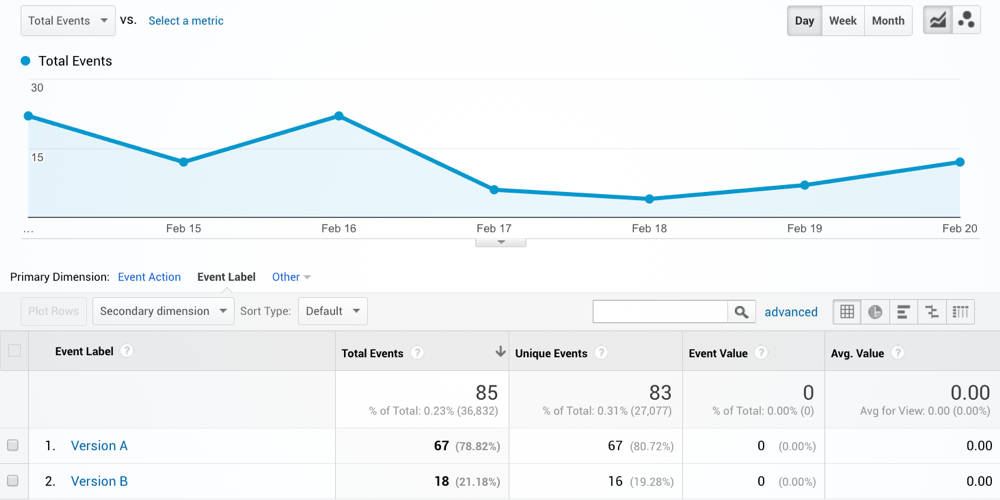

In A/B testing there must be at least two versions of the item to be tested: versions A and B. For example, we could test the design of a webpage or a single screen in a mobile application. Half of the traffic is shown one version (A) and half is shown the modified version (B). The different versions are shown to users at random. Each user’s response is recorded in an analytics or testing tool so it can be measured. Once the test is complete, statistical analysis is used to assess the results (Don’t panic! Help is available.). The experiment may show that the change had a positive impact, a negative impact, or no impact at all.

Example of an A/B Test being measured using Google Analytics Events.
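To make the random split concrete, here is a minimal Python sketch of how traffic might be divided between versions A and B and how each user’s response could be tallied. It assumes every visitor has a stable user ID; the experiment name `toolbar-color` and the `assign_variant` and `ExperimentLog` names are hypothetical, and real analytics and testing tools handle assignment and tracking in their own ways. Hashing the ID, rather than flipping a fresh coin on every page view, is a common design choice so that a returning user keeps seeing the same version.

```python
import hashlib
from collections import Counter


def assign_variant(user_id: str, experiment: str = "toolbar-color") -> str:
    """Bucket a user into version 'A' or 'B' with roughly 50/50 odds.

    Hashing the experiment name plus the user ID behaves like a coin flip
    across users, but it is repeatable: the same user always gets the same version.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    return "A" if int(digest, 16) % 2 == 0 else "B"


class ExperimentLog:
    """Tally of how many users saw each version and how many of them converted."""

    def __init__(self) -> None:
        self.visitors = Counter()
        self.conversions = Counter()

    def record(self, user_id: str, converted: bool) -> str:
        variant = assign_variant(user_id)
        self.visitors[variant] += 1
        if converted:
            self.conversions[variant] += 1
        return variant

    def conversion_rate(self, variant: str) -> float:
        # Conversion rate = users who took the desired action / users shown that version
        seen = self.visitors[variant]
        return self.conversions[variant] / seen if seen else 0.0
```

For example, `log = ExperimentLog()` followed by `log.record("user-42", converted=True)` files that user’s click under whichever version they were assigned.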

Why Conduct A/B Testing?

Have you ever seen a new design idea and been filled with doubt and uncertainty? Will it work? Will the change make the site better or worse? A/B testing can help remove some of the guesswork. Rather than wonder, teams can stop saying “we think” and start saying, “we know.” A/B testing can help:

- Make informed decisions

- Confirm a new design is going in the right direction

- Decide which of several approaches to implement

- Figure out what is working best among specific UI or copy elements

- Learn how small changes can influence user behavior

- Continually iterate on a design

- Improve user experience over time

- Optimize conversion rates

Ultimately, the advantages of A/B testing outweigh the time it takes to implement. More conversions, whether they are email newsletter subscriptions or new leads, contribute to the success of an initiative and can help demonstrate ROI. Knowing something is performing well makes it easier to justify continuing with it.

The 5-Step Plan to A/B Testing

There isn’t one right answer for deciding what to test. Test things that are likely to have a high impact, where more data about users’ behavior could improve the project. Review analytics for low conversion rates, high bounce rates, high-traffic areas (to get results more quickly), or points where users abandon whatever process or action you want them to complete. Knowing the important metrics for a campaign, program, or the business is the key to focusing on a problem that A/B testing might solve. This leads right into step one.

1. Identify a goal

Whether the test is with an ad, web page, or email, all A/B tests need a clear goal. The goal could be something you want to learn or a way a change might make something better. What metrics or conversions are you trying to improve? To help, here are some project goals paired with what to A/B test:

| Project Goal | What to A/B Test |
|---|---|
| Increase email open rate | Which email subject line gets more opens? |
| Improve banner ad click-through rate | Which copy gets more clicks? |
| Increase e-book downloads | Will changing the button text get more downloads? |
| Get more shares | What size social buttons generate the most shares? |
| Generate more leads | Which offer text generates more form submissions? |

Think about the results you want and determine metrics related to the goal. Look at current performance data to establish a baseline and benchmark for improvement.
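As a rough illustration of establishing a baseline, the Python sketch below turns current performance data into a conversion rate (conversions divided by visitors) and a target to beat. The numbers, 120 e-book downloads from 4,000 visits and a 10% relative improvement goal, are hypothetical and chosen only to show the arithmetic; the function names are likewise illustrative.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal action (e.g., downloaded the e-book)."""
    if visitors <= 0:
        raise ValueError("visitors must be a positive number")
    return conversions / visitors


def target_rate(baseline: float, relative_lift: float) -> float:
    """Conversion rate needed to beat the baseline by `relative_lift` (0.10 means 10%)."""
    return baseline * (1 + relative_lift)


# Hypothetical current data: 120 e-book downloads from 4,000 landing-page visits.
baseline = conversion_rate(120, 4_000)
goal = target_rate(baseline, 0.10)  # aim for a 10% relative improvement
print(f"baseline {baseline:.1%}, goal {goal:.1%}")  # baseline 3.0%, goal 3.3%
```

Writing the goal down as a relative lift over a measured baseline makes it easier to judge later whether a test result is a meaningful improvement.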

2. Form a hypothesis

An A/B hypothesis is an assumption on which to base the test. What is the reason one version will perform better than the other? To help formulate the hypothesis, ask a question about what the barrier to conversion is, and predict the answer: “I think that changing X into Y will have Z impact.” The hypothesis should include what you want to change and what you guess will happen as a result of the change.

A few examples:

Why aren’t users downloading the E-Book?

“I think that changing the call-to-action button text from ‘Download’ to ‘Free E-Book’ will increase the number of downloads.”

Why are users abandoning the shopping cart?

“I think that reducing the number of steps from six to three will increase the number of check-outs.”

Why isn’t anyone completing the sign-up form?

“I think that reducing the form length by eliminating fields will increase the number of completions.”

Take an educated guess at what could be preventing the approach from being successful and what could change that.

3. Design and run the test

Create the two versions to test, A and B. Pick only one variable to change. Changing multiple things at once is possible, but that is known as multivariate testing, which increases the complexity of the test. Novices should start simple. Almost anything can be tested, but pick something relatively easy to change that is likely to have a large impact.
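The Python sketch below illustrates why changing one variable keeps a test simple. Each version of a page is modeled as a plain dictionary using hypothetical element names (`headline`, `button_text`, `button_color`); the first check confirms the two versions differ in exactly one element, and the second part counts how many versions a multivariate test would need if several elements varied at once.

```python
from itertools import product

# Hypothetical page versions: only the button text differs.
control = {"headline": "Get the guide", "button_text": "Download", "button_color": "blue"}
variant = {"headline": "Get the guide", "button_text": "Free E-Book", "button_color": "blue"}


def changed_fields(a: dict, b: dict) -> list:
    """Names of the elements that differ between two versions of a page."""
    return sorted(key for key in a.keys() | b.keys() if a.get(key) != b.get(key))


# A simple A/B test changes exactly one element, so any difference traces back to it.
assert changed_fields(control, variant) == ["button_text"]

# Varying several elements at once (multivariate testing) multiplies the versions.
headlines = ["Get the guide", "Learn A/B testing"]
button_texts = ["Download", "Free E-Book"]
button_colors = ["blue", "green", "orange"]
combinations = list(product(headlines, button_texts, button_colors))
print(len(combinations))  # 12 versions, each needing its own share of the traffic
```

Because traffic is split across every combination, each version in a multivariate test collects data more slowly, which is part of why it is harder to run well.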

Here are some examples of simple variables to test:

Headlines and Subheads

Text length, nuances in language, clarity, and attitude can impact how users respond to copy. Try testing:

- Voice and tone

- Length

- Statements versus questions

- Trigger words

- Positive versus negative messages

Calls to action

Calls to action are key to success, so what changes might make people download, share, sign up, or buy more? Experiment with:

- Text or phrasing on buttons

- Varying the size, color, or shapes of buttons

- Using text alone or icons with text

- Hyperlinks versus buttons, to see which gets more clicks and taps

- Placement of the button or CTA on the page

- Changing the offer itself

Images or Graphics

Images can help establish a connection with a user or drive them further away. Try testing:

- Use of photography vs. illustration for a main image

- Variations in images of people: gender, race, age, individuals or groups

- People versus product images

- Fluffy kittens or cuddly puppies?

Forms

Forms may be necessary, but they don’t have to be painful for users. Tweaks to make things easier, especially for users on mobile devices, can have a big impact. Try testing:

- Form length

- Form fields

- Form labels and placement

- Required fields

Remember, only change one variable at a time so the link between the change and its impact is clear. Bigger, more noticeable changes to that one variable tend to produce bigger, easier-to-detect differences in results.

4. Analyze the results

Monitor the test (see the A/B testing tools listed below for options) to make sure things are running correctly, but don’t draw conclusions from the results until the test is complete. It may be tempting to call a winner too soon. The test should run long enough to produce meaningful, statistically significant results, which means having enough data to make changes confidently based on them. This can take anywhere from a week to two months, depending on the sample size needed. The more data collected, the more stable the results will be.
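For a sense of what “statistically significant” means for conversion rates, here is a minimal Python sketch of one common check, a two-sided two-proportion z-test. The article doesn’t prescribe a particular test, and many testing tools use other methods (some use Bayesian statistics), so treat this as an illustration rather than the method your tool uses. The visitor and conversion counts are hypothetical. A p-value below a threshold chosen in advance, conventionally 0.05, is usually read as significant; recomputing it repeatedly while the test is still running raises the chance of crowning a false winner, which is another reason to wait until the test is complete.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Compare the conversion rates of versions A and B.

    Returns (z, p_value). The p-value is two-sided: the probability of seeing a
    difference at least this large if both versions truly converted equally well.
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each version needs at least one visitor")
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Assuming no real difference, pool both groups to estimate one shared rate.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("no variation in outcomes (everyone or no one converted)")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution: 2 * (1 - CDF(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 10,000 visitors saw each version.
z, p = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")  # z is roughly 2.4 and p is below 0.05
```

In this made-up example, version B’s 3.6% conversion rate versus A’s 3.0% would count as significant at the 0.05 level; with only a few hundred visitors per version, the same rates would not.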

Once you set a sample size, stick to it. Figure out the minimum number of responses needed to get statistically significant results; the aim is to be able to say with enough certainty that the change caused the outcome. Don’t abandon the test too soon because you think you have a winner. Wait until you know the winner is a real winner.
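Sample-size calculators (see the resources below) do this math for you, but a minimal Python sketch shows what goes into it. It uses a standard normal-approximation formula for comparing two conversion rates and needs Python 3.8+ for `statistics.NormalDist`. The significance level (alpha = 0.05), 80% power, the 3% baseline, the 3.3% target, and 5,000 daily visitors are all hypothetical inputs, and real calculators may use slightly different formulas. The main takeaway: the smaller the change you want to detect, the more visitors you need.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version to detect a change from the
    `baseline` conversion rate to the `target` rate with a two-sided test."""
    if not (0 < baseline < 1 and 0 < target < 1) or baseline == target:
        raise ValueError("rates must be between 0 and 1 and must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_power = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    mean_rate = (baseline + target) / 2
    spread_null = math.sqrt(2 * mean_rate * (1 - mean_rate))
    spread_alt = math.sqrt(baseline * (1 - baseline) + target * (1 - target))
    n = ((z_alpha * spread_null + z_power * spread_alt) / (target - baseline)) ** 2
    return math.ceil(n)


def days_needed(per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Rough test duration if all daily traffic is split evenly across the versions."""
    return math.ceil(per_variant * variants / daily_visitors)


# Hypothetical: 3% baseline, hoping to detect a lift to 3.3%, with 5,000 visitors a day.
n = sample_size_per_variant(baseline=0.03, target=0.033)
print(n, "visitors per version,", days_needed(n, daily_visitors=5_000), "days")
# roughly 53,000 visitors per version, or about three weeks of traffic
```

Doubling the detectable lift (3% to 3.6%) cuts the required sample to roughly a quarter, which is one reason bigger, bolder changes can be tested faster.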

5. Implement the results

Implement the clear winner—but continue to test. A/B testing is about incremental improvements, and data from additional tests will help with continuous improvement. The more you test, the more you know.

Don’t guess. Test!

Having evidence to back up a change or direction makes it easier to make good decisions. By showing what works and what doesn’t, A/B testing can have an impact on the bottom line. After conducting a few tests and seeing how one version can perform significantly better than another, no one will question why a team wants to conduct A/B testing, only why they weren’t doing it all along.

Resources for Beginners and Beyond

Beginner’s Guides

- ABCs of AB testing, Marketing Land

- Ultimate Guide to A/B Testing, Smashing Magazine

- A Beginner’s Guide to A/B Testing, Kissmetrics

- Newbies Guide to Testing, Infographic from Marketing Profs

Extra Credit

- Critical Difference Between A/B and Multivariate Testing, Hubspot

- Crash Course on A/B Testing Statistics, Conversion XL

- Conversions and Statistical Confidence, Kissmetrics

Calculators

- Sample Size, Online Calculator

- A/B Split Test Significance, Online Calculator

- Excel Sheet with A/B Testing Formulas, Excel Spreadsheet

A/B Testing Tools

- Ultimate Comparison of A/B testing software, Conversion Rate Experts

- A/B testing tools to help improve conversions, Mashable’s Top 10

A/B Testing Case Studies

- 100 Conversion Optimization Case Studies, Kissmetrics’ Curation

- A/B Testing Case Studies, Optimize’s Library

- Surprising A/B Test Results to Stop You Making Assumptions, Unbounce
