Any UX practitioners watching the HBO series Silicon Valley may have been screaming at their TVs during season 3’s final weeks. The fictional consumer-facing compression platform Pied Piper launched to dismal daily active user numbers, and viewers saw a perfect illustration of what can happen when no user research or testing is involved in a product development process.

If Pied Piper had wanted to do research, they would’ve had it relatively easy; user research can sometimes be done as easily as grabbing some people in a coffee shop. And while products that have seemingly unlimited resources (e.g. the Facebooks and Googles of the world) can dedicate entire departments to constant and rigorous testing, others face more internal barriers. Time, money, and human resources are often at the forefront, not to mention the struggle to gain internal buy-in.

That said, all products stand to gain a great deal of insight through user testing, no matter how lean or robust it is. Recently, I found myself in a position where testing was necessary but faced many hurdles. In my situation, we had an added layer of complexity: customer relationship managers who fiercely protect the way we interact with our existing customers.

I work for a business-to-business (B2B) company called JW Player. We specialize in supporting enterprises and developers in online video by helping them reach viewers, monetize video, and grow their audiences. As JW Player has transitioned to a software-as-a-service business over the past couple of years, we have grown immensely and expanded into new product lines. To make sure we were making great choices for our customers, we embarked on a journey of testing with our customers and users.

In this article, I’ll walk through how we chose our methods, identified constraints, set our goals, and executed the plan.

Choosing a testing method

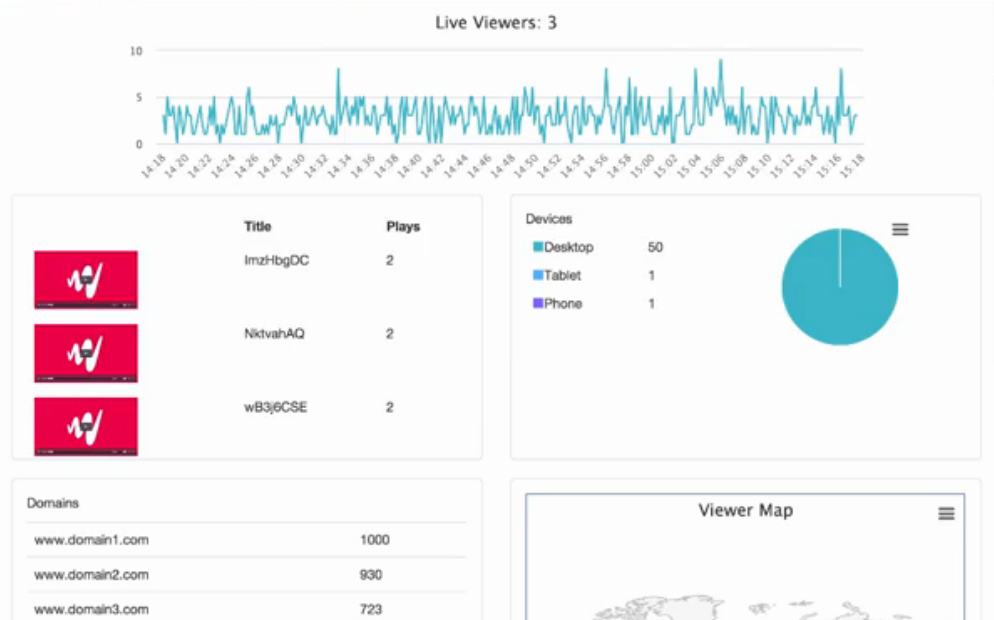

When JW Player first established a new user research process (with a one-person UX team guiding the way!), we learned which customer types we were interested in speaking with, and located the correct people within each customer organization to speak with. But then we found ourselves with the opportunity to do an early test of a new real-time analytics tool. The tool is for measuring video traffic and performance across devices and geolocations, and we wanted to learn a few things about the product, including:

- Which metrics do users find most valuable?

- Which user types are most likely to use this tool?

- Does what we display in the tool match what users interpret?

- Internal manageability: All the work is online, so everything is shareable and communication is easier between the many stakeholders (who could be on different teams).

- External manageability: Scheduling is simpler when travel isn’t involved, and it’s an easier ask for users to share 30 minutes from the comfort of their own office, than to come to ours. Plus, JW Player customers are all over the world! This is a great way to include them all.

- Flexibility: In B2B organizations, resources aren’t always there for onsite testing, and remote testing can be done leanly and with low overhead.

- What are we investigating or testing?

- Which users are we targeting

- What will we say to them?

- Who on our team needs to be looped in?

- Laptop with camera and microphone

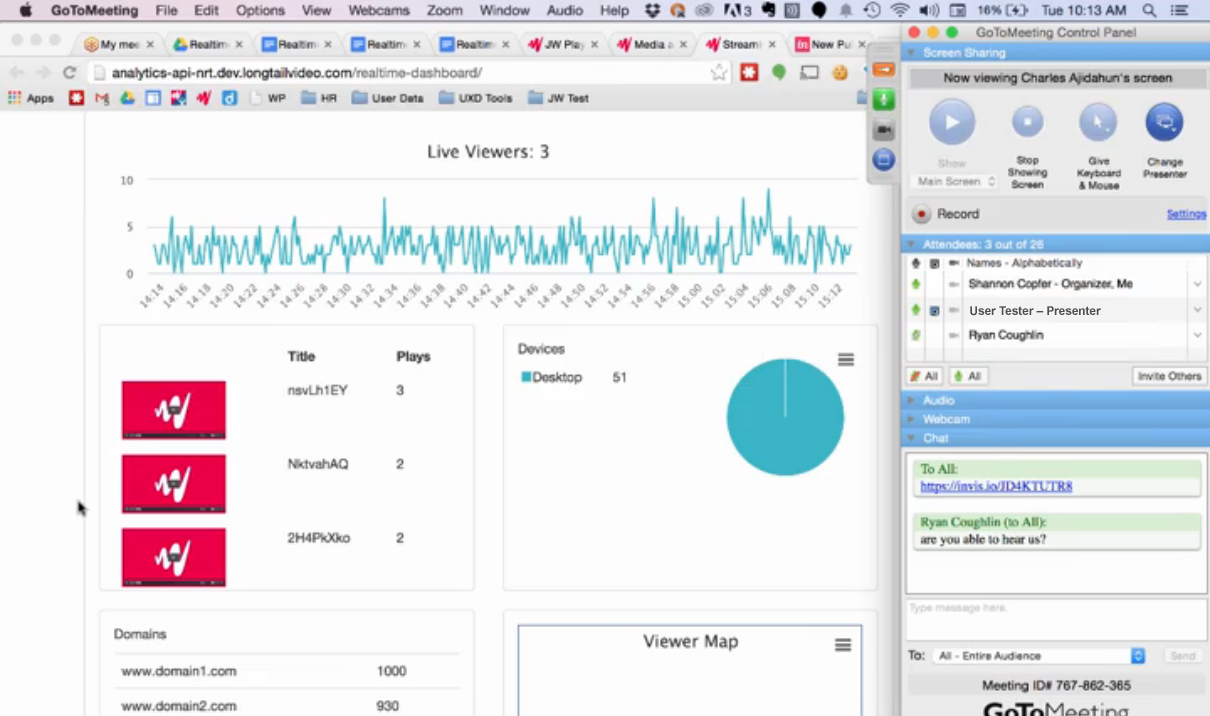

- GotoMeeting for group calls and screensharing

- Camtasia for screen- and sound-recording

- The JW Player Dashboard for storing, organizing, and sharing session recordings

- Everything else Google:

- Gmail for outreach and setup

- Calendar for sending session invitations

- Sheets plugin for mail merges

- Drive for note taking and sharing, and tracking user conversations

- Where are the users located?

- Is the customer’s account in good standing?

- Is there a travel budget?

- What questions need to be answered, and by when?

Depending on the timing in the development process and where a team’s knowledge gaps are, there are a lot of options for conducting user research, which have been detailed and explored and explained by many others. We didn’t have a ton of time, and we certainly didn’t have budget. That ruled out travelling to other offices or setting up an in-house testing studio and scheduling sessions. We also had specific questions about our low-fidelity prototype, so we knew that a moderated test would be sufficient to get the answers we needed.

We decided that remote moderated testing of the prototype would work quite nicely, because it offers:

By remotely testing our moderated prototype, we’d be able to track the sessions via screen recording for easy sharing afterwards. Additionally, multiple team members could attend from different locations, and we could conduct several sessions within days. We’d be able to validate our ideas about the prototype and surface any major red flags before spending more development and design time on the final product.

We chose our method. Now it was time to make the plan and get started testing our analytics product, “Right Now Analytics.”

Phase 1: Planning & Goal-Setting

With our method chosen, it was now time to build out our overall plan and clearly state our goals. We like to use Tomer Sharon’s one-page research plan as a template for discovery and communication. It’s really helpful for getting everyone on the same page at the beginning of a project. It answers questions like:

Sharing a document like this prepares the wider team and sets their expectations for what we’ll get out of the research, and when we’ll know we’re done. Furthermore, it keeps all this communication lean, which is our theme for this type of research in the first place.

For our research projects, we’ve tested anything from rough wireframes to pixel-perfect clickable prototypes. In this case, the Data team built a live, unstyled version of the page (filled with real data!) for us to test. Since for this round of research we were looking to assess the value of the data itself, and make higher-level decisions about what should be included on the page, this rough, live-data prototype was a great fidelity choice.

Lessons learned

Make a plan. Set specific, clear goals. Be sure the entire team agrees to the plan. Ensure the subject and focus of the test are appropriate for uncovering answers to the questions at hand.

Phase 2: Targeting, Outreach & Coordination

This is where we encountered a hurdle that many public-facing product teams may not have to face. Between a customer relationship management tool, a product database, and multiple sources of analytics information, user databases within B2B-focused organizations can be varied and disconnected. In many cases, “Users,” “buyers,” and “customers” are distinct, and we don’t always know for sure that a user isn’t also a buyer in the middle of an upgrade process with a sales rep, or if a frustrated user already seeking support would be upset by an additional note from us asking them to test a product. Needless to say, this is tricky to navigate; to ensure the least amount of friction, we looked to account managers for insights on who to talk to (or not to), and when.

During this phase, our goal was to identify the right users to reach out to, and ensure that we weren’t going to damage or otherwise negatively affect a sensitive existing relationship.

This type of discovery takes time. Depending on how an organization is structured, it might take a journey across teams and multiple databases to gather the information needed — plus, getting the blessing of the account managers can happen quickly or take more time than one might anticipate.

Lessons learned

While it takes time and effort, understanding a user’s status within the larger system is critical before asking her to participate in user testing. Make that effort to ensure a user isn’t currently in a delicate or inopportune state before reaching out.

Phase 3: Conducting the Sessions

Our users were selected and ready for testing. We chose a pretty lean set of tools:

We set up our remote testing tool, invited our users, and explained to them exactly how the test would go.

During these real-time analytics prototype testing, some users also showed us the other analytics and real-time tools they use. This was particularly insightful because we could see the other tools our users rely on as they see them. This is a great example of the benefit of screensharing over other remote methods of collecting feedback.

Lessons learned

Don’t be afraid to guide the conversation back to your planned topics. It’s more important to listen and respond naturally than to capture everything in your notes. Also, it may sound silly and quite obvious, but always remember to hit “record,” (and pay attention to which screen the software is recording if you have a multi-screen setup)! We learned that lesson the hard way, and it’s always worth a gentle reminder.

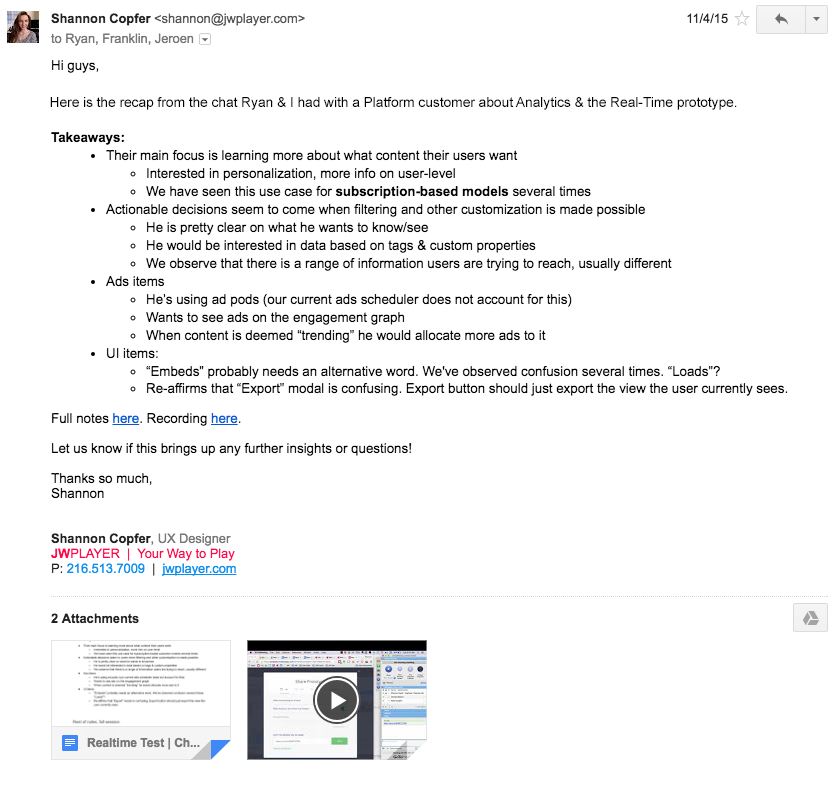

Phase 4: Debrief, Capture & Share

Once the user disconnected, the participants on our side chatted briefly about what we just learned. We pointed out anything that stuck out to us as interesting or noteworthy, and collect what we think are our biggest takeaways.

During the testing, we noticed that while users were enthusiastic about real-time data and had a lot of questions about what could be possible, in practice they seemed only to need a basic set of core real-time data to make decisions.

Additionally, some users who said they wouldn’t need real-time analytics showed us how they actually use Chartbeat (a real-time data tool) for some decisionmaking. This is another reason screensharing is so important; sometimes there’s a difference between what users say and what they do, a difference that only surfaces when researchers get in their world.

Lessons learned

There will be a lot to think about after a session, and it’s hard to remember it all even a day later. Debriefing right away helps to capture thoughts that perhaps didn’t get written down. It also helps to think over what a user showed versus what they talked about — often what they do is the real key.

Phase 5: Action Items & Beyond

After all the sessions were conducted, we went through our notes to look for patterns and overall takeaways. The product managers then, armed with this information, create the necessary user stories and plan with their engineering teams for execution.

While this testing wasn’t completed instantaneously, we were able to turn around impactful findings in a couple of weeks without a budget for research or travel. We did not inconvenience users by making them come to us, and took up the smallest amount of their time possible with our remote sessions.

We had enough validation to affirm that we could move ahead with the prototype as planned — that overall, users found it valuable and interesting. We learned that we needed to make some tweaks to the layout, to create a hierarchy of information that would make the most sense to these users. We also learned that users in certain types of customer organizations might not be interested in this tool, which gave us information to empower our messaging for later rounds of Beta tests with the actual product.

The data pipeline got polished, our wireframes were sent to design for final mockups, and eventually they were made into the ‘Right Now’ Analytics users see today.

Lessons learned

When communicating research findings, anecdotes can be as powerful as quantifiable data and other patterns. Choose reporting methods wisely and ensure the output is as impactful as possible for the audience who must act on it. Consider also making a supercut of interesting moments from the sessions. This is a great way to build empathy and get engineers and product teams connected with the people for whom they’re building solutions.

There will always be challenges in testing

Everyone encounters their own unique challenges and hurdles when planning and conducting studies. If there’s not ton of time or a ton of money for an “ideal” in-person research series, consider doing a few remote moderated testing sessions (the golden number is still just 5!). Depending on the fidelity of the designs or prototype being tested, it can answer a range of open questions about value, clarity, architecture, flow, and more.

Teams making the decision about testing will want to consider:

If our team could make this work, any team can, and Richard Hendricks and Pied Piper should have, too. Here’s hoping that Silicon Valley season 4 brings us a more user-centric adventure.
